Preparatory tests

IHE has a collection of tools for testing implementations of actors in IHE profiles. You will find links to all tools for testing IHE profiles and their test descriptions on the complete Index to IHE Test Tools.

The test cases in this section are for a subset of IHE's tools and are generally organized in the menu at left according to the tool that is used.

User guides for tools associated with these test cases are here: http://gazelle.ihe.net/content/gazelle-user-guides

If you are preparing for an IHE Connectathon, Gazelle Test Management for your testing event contains a list of the Preparatory tests you must perform, customized according to the IHE profiles/actors/options you have registered to test. You can find your list of Preparatory tests in Gazelle Test Management under menu Testing-->Test execution. (For IHE European Connectathons, Gazelle Test Management is at https://gazelle.ihe.net/gazelle/home.seam; other testing events may have a different URL.)

ATNA tests

This section contains test cases performed with the Gazelle Security Suite tool:

- Link to tool: http://gazelle.ihe.net/gss

- User Guide: https://gazelle.ihe.net/gazelle-documentation/Gazelle-Security-Suite/user.html

- Overview of tool features: recording


11099: Read ATNA Resources page

--> Prior to performing ATNA tests, please read this page for guidelines that address frequently asked questions about testing expectations. <--

THIS PAGE APPLIES TO ATNA TESTING AT 2024 IHE CONNECTATHONs.

- ATNA Requirements

- Gazelle Security Suite (GSS) tool for ATNA testing

- Security Policy (TLS & audit) for the 2024 IHE EU/NA Connectathon

- GSS: Digital Certificates for IHE Connectathons

- GSS: ATNA Questionnaire

- GSS: ATNA Logging Tests - TLS Syslog

- Questions about ATNA testing?

- Evaluation

ATNA Requirements

The ATNA requirements are in the IHE Technical Framework:

- ITI Technical Framework, Vol 1, Section 9 - ATNA Profile.

- ITI Technical Framework Vol 2, for the [ITI-19] and [ITI-20] transactions.

- The RESTful ATNA (Query and Feed) Trial Implementation Supplement specifies additional features.

NOTE: The following options were retired in 2021 via CP-ITI-1247 and are no longer tested at IHE Connectathons:

- STX: TLS 1.0 Floor with AES Option

- STX: TLS 1.0 Floor using BCP195 Option

Gazelle Security Suite (GSS) tool for ATNA testing:

Tool-based testing of TLS (node authentication) and of the format and transport of your audit messages is consolidated in one tool - the Gazelle Security Suite (GSS).

- Link to the tool: http://gazelle.ihe.net/gss.

- Instructions for use of the tool are contained in ATNA test descriptions - here.

Security Policy (TLS & audit) for the 2024 IHE EU/NA Connectathon

To ensure interoperability between systems doing peer-to-peer testing over TLS (e.g. XDS, XCA...), the Connectathon technical managers have selected a TLS version and ciphers to use for interoperability tests during Connectathon week. (This is analogous to a hospital mandating similar requirements at a given deployment.)

TLS POLICY for [ITI-19]:

*** For IHE Connectathons, interoperability testing over TLS shall be done using:

- TLS 1.2

- cipher suite - any one of:

  - TLS_DHE_RSA_WITH_AES_128_GCM_SHA256

  - TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256

  - TLS_DHE_RSA_WITH_AES_256_GCM_SHA384

  - TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384

- A digital certificate, issued by the Gazelle Security Suite (GSS) tool. See details below.
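As an illustration only, the TLS policy above could be expressed with Python's standard `ssl` module roughly as follows. This is a minimal sketch, not a reference configuration: the function name `make_policy_context` is hypothetical, and the cipher strings are the OpenSSL spellings of the four IANA suite names listed above.

```python
import ssl

# OpenSSL names for the four cipher suites in the policy (IANA names in comments).
POLICY_CIPHERS = [
    "DHE-RSA-AES128-GCM-SHA256",    # TLS_DHE_RSA_WITH_AES_128_GCM_SHA256
    "ECDHE-RSA-AES128-GCM-SHA256",  # TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256
    "DHE-RSA-AES256-GCM-SHA384",    # TLS_DHE_RSA_WITH_AES_256_GCM_SHA384
    "ECDHE-RSA-AES256-GCM-SHA384",  # TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384
]


def make_policy_context(protocol=ssl.PROTOCOL_TLS_CLIENT):
    """Build an SSLContext pinned to TLS 1.2 and the policy cipher suites.

    Hypothetical helper: certificates and trust store are not loaded here.
    """
    ctx = ssl.SSLContext(protocol)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    ctx.maximum_version = ssl.TLSVersion.TLSv1_2
    # Restrict the TLS 1.2 cipher list to the policy suites.
    ctx.set_ciphers(":".join(POLICY_CIPHERS))
    return ctx
```

Which of the four suites is actually negotiated depends on your TLS library build and on your test partner; the policy only requires that one of them is used.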

AUDIT MESSAGE POLICY for [ITI-20]:

Before 2020, an ATNA Audit Record Repository (ARR) was required to support receiving audit messages in both TLS syslog and UDP syslog. That meant that all Secure Node/Applications could send their audit messages to any ARR.

Now, all actors sending and receiving audit messages may choose to support TLS Syslog, UDP Syslog, and/or FHIR Feed for transport. We expect that the Audit Record Repositories at the NA and EU Connectathons will provide good coverage of the options (TLS, UDP, FHIR), though some ARRs may support a subset. In particular, the FHIR Feed Option in ITI-20 may have less support because it was new as of 2020.

Connectathon technical managers will not select one transport for all audit records exchanged during Connectathon. Instead, Secure Node/Applications will choose ARRs for test partners that are compatible with the audit records they send in ITI-20. Gazelle Test Management will show compatible partners for ITI-20 interoperability tests: "ATNA_Logging_*".

==> GSS: Digital Certificates for IHE Connectathons

The Gazelle Security Suite (GSS) tool is the SINGLE PROVIDER OF DIGITAL CERTIFICATES for IHE Connectathons.

To obtain a digital certificate from the GSS tool for preparatory & Connectathon testing, follow the instructions in test 11100. That test contains instructions that apply to an IHE Connectathon, whether face-to-face or online.

Some facts about the digital certificates for Connectathon testing:

1. The digital certificate you generate in GSS:

   a. is issued by a Certificate Authority (CA) with a 2048-bit key. You must add the certificate for the new CA to your trust store.

   b. will contain the fully-qualified domain name (FQDN) of your Connectathon test system. When you use GSS to request the certificate, the tool will prompt you for this value. The FQDN value(s) will be in the subjectAltName entry of your digital certificate. (You may need to provide more than one FQDN when you generate your certificate, e.g., if you will use your system to test TLS connections outside of the Connectathon network, such as using the NIST XDS Tools in your local test lab.)

2. Test 11100 contains detailed instructions for generating your certificate, including how to get the fully-qualified domain name for your test system.

3. Item (1.b.) means that each system testing TLS transactions during Connectathon week will have a digital certificate that is compatible with the 'FQDN Validation Option' in ATNA. Thus, TLS connections with test partners will work whether or not the client is performing FQDN validation. This is intentional.

4. The certificates are only for testing purposes and cannot be used outside of the IHE Connectathon context.
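To make the role of the subjectAltName entry concrete, here is a small sketch of the kind of check a client performing FQDN validation might apply. It assumes the certificate is available as the dictionary returned by Python's `ssl.SSLSocket.getpeercert()`; the helper names are hypothetical, the second hostname in the sample is invented for illustration, and only exact (non-wildcard) matching is shown.

```python
def dns_names(cert):
    """Return the DNS entries of subjectAltName from a getpeercert()-style dict."""
    return [value for kind, value in cert.get("subjectAltName", ()) if kind == "DNS"]


def fqdn_in_certificate(cert, fqdn):
    """True if fqdn exactly matches one DNS subjectAltName entry (case-insensitive)."""
    wanted = fqdn.lower().rstrip(".")
    return any(name.lower().rstrip(".") == wanted for name in dns_names(cert))


# Sample data shaped like getpeercert() output; the values are illustrative only.
sample_cert = {
    "subjectAltName": (
        ("DNS", "acme0.ihe-test.net"),
        ("DNS", "acme0.example.org"),
    )
}
```

In practice the TLS library usually performs this check for you when hostname verification is enabled; the sketch only shows what is being compared.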

==> GSS: ATNA Questionnaire

Systems testing ATNA are required to complete the ATNA Questionnaire in the GSS tool, ideally prior to Connectathon week. Embedded in the questionnaire are Audit Record tests and TLS tests customized for the profiles & actors you registered to test at Connectathon.

- Follow instructions in test 11106.

==> GSS: ATNA Logging Tests - ATX: TLS Syslog Option

Read the Technical Framework documentation; you are responsible for all requirements in Record Audit Event [ITI-20] transaction. We will not repeat the requirements here.

WHICH SCHEMA???: The Record Audit Event [ITI-20] specifies use of the DICOM schema for audit messages sent using the ATX: TLS Syslog and ATX: UDP Syslog options. The DICOM schema is found in DICOM Part 15, Section A.5.1.

- The Gazelle Security Suite tool uses the DICOM schema with IHE modifications. The schema used by the GSS tool is: https://gazelle.ihe.net/XSD/IHE/ATNA/dicom_ihe_current.xsd

We expect implementations to be compliant; we have tested audit messages using the DICOM schema at IHE Connectathons since 2016.

- The GSS tool will only provide validation against the DICOM schema. If you fail that test, it is our signal to you that your audit messages are not compliant with the latest DICOM schema. See test 11116.

- We expect interoperability testing at the Connectathon to occur using audit records that are compliant with the DICOM schema.

SENDING AUDIT MESSAGES: You can send your audit records to the GSS tool simulating an Audit Record Repository. See test 11117.

Questions about ATNA Testing?

Contact the Technical Project Manager for the IT Infrastructure domain. Refer to the Contact Us page.

Evaluation

There is no specific evaluation for this test.

Create a text file stating that you found and read the page. Upload that text file into Gazelle Test Management as the Log Return file for test 11099.


11100: Obtain Digital Certificate for TLS Testing

Overview of the test

This test contains instructions for obtaining a digital certificate for your test system that is registered for an IHE Connectathon. You will obtain your digital certificate(s) from the Gazelle Security Suite tool.

Prerequisites for this test

First, please read the ATNA Testing Resources page before proceeding with this test. That page contains important context for using the digital certificates for Connectathon-related tests.

When you generate your digital certificate in Gazelle Security Suite, you will need to know two values:

(1) The hostname(s) for your test system:

- For IHE Connectathons face-to-face: The hostname(s) are assigned to your test system by Gazelle Test Management. (See https://gazelle.ihe.net/TM/ for the 2022 IHE Connectathon; the link may differ for other testing events).

To find the hostname for your test system, log into Gazelle Test Management, then select menu Preparation-->* Network Interfaces.

- For IHE Connectathons Online: This is the public hostname(s) for your test system. For Connectathons Online, hostname and IP addresses are determined by the operator of the test system. (The operator still shares its hostname(s) with other participants using Gazelle Test Management.)

(2) Domain Name:

- For IHE Connectathons face-to-face: The domain name of the Connectathon network. This information is published by the Technical Manager of each IHE Connectathon. (E.g., for the IHE Connectathon 2022, the Domain Name is ihe-europe.net).

- For IHE Connectathons Online: Your public domain name.

Location of the Gazelle Security Suite (GSS) tool

Log in to the GSS tool

When logging in to GSS, you will use your username & password from Gazelle Test Management for your Connectathon. There are separate CAS systems for different instances of Gazelle Test Management, and you will have to take this into account when logging in to GSS:

- The European CAS is linked to Gazelle Test Management at http://gazelle.ihe.net/TM/ <---This will be used for the 2022 IHE EU/NA Connectathon

- The North American CAS is linked to Gazelle Test Management at https://gazelle.iheusa.org/gazelle-na/

- If you don't have an account, you can create a new one on the Gazelle Test Management home page.

On the GSS home page (http://gazelle.ihe.net/gss) find the "Login" link at the upper right of the page.

- Select either "European Authentication" or "North American Authentication"

- Enter the username and password from the corresponding Gazelle Test Management instance linked above.

Instructions - Obtain a Certificate

- In GSS, select menu PKI-->Request a certificate

- Complete the fields on the page:

- Certificate type: Choose "Client and Server" from dropdown list (Required field)

- key size: 2048

- Country (C): (required)

- Organization (O): Your organization name in Gazelle Test Management (Required field)

- Common Name (CN): The Keyword for your test system in Gazelle Test Management (eg EHR_MyMedicalCo) (Required field)

- Title: (optional)

- Given name: (optional)

- Surname: (optional)

- Organizational Unit: (optional)

- eMail: (optional) email of a technical contact making the request

- Subject Alternative Names:

- You must enter at least one value in this field: the fully-qualified domain name of your test system.

- For a face-to-face Connectathon, this is a combination of the hostname of your test system and the domain name. (See the Prerequisites section above)

- E.g., for a Connectathon network, the hostname of your system might be acme0, and the domain name might be ihe-test.net. So, an example of a fully-qualified domain name entered in this field for a digital certificate is acme0.ihe-test.net

- This value may contain additional fully-qualified domain name(s) for your test system when it is operating outside of a face-to-face Connectathon, e.g. when you are testing with the NIST XDS Tools in your home test lab, or if you are participating in an online Connectathon.

- If you have more than one hostname, multiple values are separated by a comma.

- Click the "Request" button.

- You will then be taken to a page listing all requested certificates. Find yours on the top of the list, or use the filters at the top.

- In the "Action" column, click the "View Certificate" (sun) icon. Your certificate details are displayed. Use the "Download" menu to download your certificate and/or the Keystore.
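The value for the Subject Alternative Names field described above can be assembled mechanically. The following sketch uses hypothetical helper names and assumes a plain comma with no surrounding spaces as the separator; check the GSS form if it behaves differently.

```python
def make_fqdn(hostname, domain):
    """Join a hostname and a domain name into a fully-qualified domain name."""
    return f"{hostname.strip().strip('.')}.{domain.strip().strip('.')}"


def san_field(fqdns):
    """Comma-separate FQDNs for the form field, dropping duplicates, keeping order."""
    return ",".join(dict.fromkeys(fqdns))


# acme0 / ihe-test.net are the example values used in the instructions above;
# acme0.example.org stands in for an additional public hostname (illustrative).
value = san_field([make_fqdn("acme0", "ihe-test.net"), "acme0.example.org"])
```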

It is also possible to find your certificate using the menu:

- Select menu PKI-->List certificates

- In the "Requester" column, filter the list by entering your username at the top of the column (the username you used to log in to GSS)

- Use the icon in the "Action" column to find and download your certificate, as described above.

You are now ready to use this certificate for performing:

- authentication tests with the Gazelle Security Suite tool

- interoperability (peer-to-peer) tests with your Connectathon partners

Evaluation

There is no specific evaluation for this test.

Create a text file stating that you have requested & received your certificate(s). Upload that text file into Gazelle Test Management as the Log Return file for test 11100.

In subsequent tests (eg 11109 Authentication test), you will verify the proper operation of your test system with your digital certificate.


11106: ATNA Questionnaire

Overview of the test

In this test you complete a form which collects information that will help us evaluate the Audit Logging and Node Authentication (ATNA) capabilities of your test system.

The contents of your ATNA Questionnaire are customized based on the profiles and actors that you have registered in Gazelle Test Management for a given testing event (e.g. an IHE Connectathon). Depending on which profiles/actors you have registered for, the ATNA Questionnaire will ask you to validate audit messages for transactions you support, and you will be asked to demonstrate successful TLS connections for the transports you support (e.g. DICOM, MLLP, HTTP).

Prerequisites for this test

Before you can generate your on-line ATNA questionnaire:

- You must have a test system registered in Gazelle Test Management for an upcoming testing event.

- (See https://gazelle.ihe.net/TM/ for the 2022 IHE Connectathon; the link may differ for other testing events).

- Your test system must be registered to test an ATNA actor, e.g., Secure Node, Secure Application, Audit Record Repository.

- Your test system must have a status of "Completed" in Gazelle Test Management.

- This is because the content of the Questionnaire is built based on the profiles & actors you support. We want to know that your registration is complete.

- To check this, log in to Gazelle Test Management.

- Select menu Registration.

- On the System summary tab for your test system, you must set your Registration Status to "Completed" before you start your ATNA Questionnaire.

Location of the ATNA Tools: Gazelle Security Suite (GSS)

Log in to the GSS tool

When logging in to GSS, you will use your username & password from Gazelle Test Management for your testing event. There are separate CAS systems for different instances of Gazelle Test Management, and you will have to take this into account when logging in to GSS:

- The European CAS is linked to Gazelle Test Management at http://gazelle.ihe.net/TM/ <---This will be used for the 2022 IHE EU/NA Connectathon

- The North American CAS is linked to Gazelle Test Management at https://gazelle.iheusa.org/gazelle-na/

- If you don't have an account, you can create a new one on the Gazelle Test Management home page.

On the GSS home page (http://gazelle.ihe.net/gss) find the "Login" link at the upper right of the page.

- Select either "European Authentication" or "North American Authentication"

- Enter the username and password from the corresponding Gazelle Test Management instance linked above.

Instructions

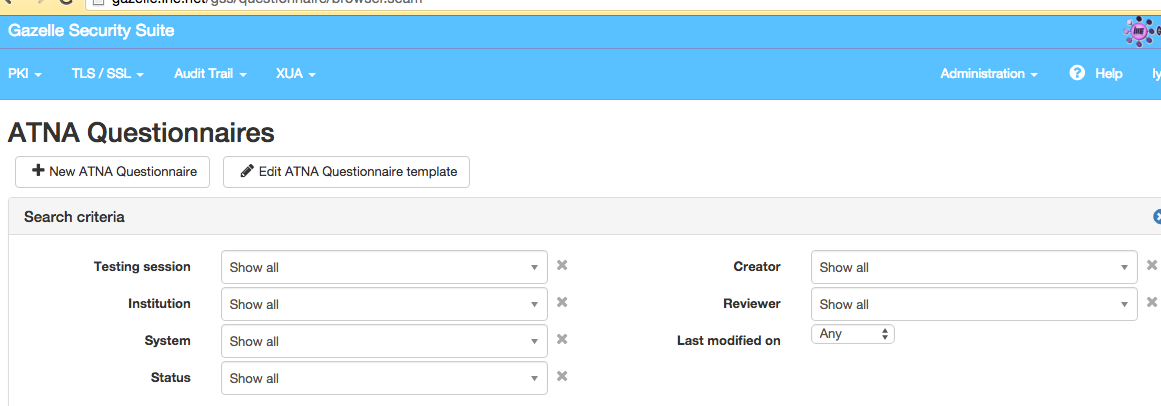

1. In GSS, select menu Audit Trail --> ATNA Questionnaires

2. First, search for any existing questionnaires for your organization. Use the filters at the top of the page to search based on various criteria. You will only be able to access the questionnaires created for your organization's test systems. Admins and monitors can access all of them.

3. You can use the icons in the right 'Actions' column to:

- View the content of the questionnaire

- Edit it

- Delete it (administrators only)

- Review it (monitors only)


4. If no questionnaire is available for your test system, you need to create a new one.

- Click on the "New ATNA Questionnaire" button

- From the dropdown list, select the name of your test system. Note: If your system doesn't appear...

- ...is your test system registered with status of "Completed"?

- ...are you registered for ATNA Secure Node or Secure Application?

- ...is the testing session closed (ie is the connectathon over)?

- Next, click the "Back to list" button. Use the filter at the top to find your questionnaire in the list. Use the "Edit" icon in the "Action" column to begin.

5. Complete the questionnaire. You are now in the ATNA Questionnaire Editor.

- In the System details, identify the ATNA actor you support. Choose either "Secure Node (SN)" or "Secure Application (SA)"

- Complete the "Inbound network communications" tab

- Complete the "Outbound network communications" tab

- Complete the "Authentication process for local users" tab

- Complete the "Audit messages" tab. This tab is used with test 11116.

- Secure Nodes only: Complete the "Non network means for accessing PHI" tab

- Complete the "TLS Tests" tab. This tab is used with test 11109.

6. Mark your questionnaire "Ready for review"

- When all tabs in the questionnaire are complete, set the Questionnaire's status to "Ready for review" in the "Questionnaire details" section. This is a signal that you have completed your work; we do not want to have monitors evaluating incomplete questionnaires.

Evaluation

Depending on the testing event, the results of this test may be reviewed in advance. More typically, it will be reviewed and graded by a Monitor during the test event itself (e.g. during Connectathon week).

Note: You cannot get connectathon credit (i.e. a "Pass") for your ATNA Secure Node/Application without completing and submitting your questionnaire.


11109: Authentication Test

- Prerequisites

- Overview

- Gazelle Security Suite

- Instructions for clients

- Instructions for servers

- Evaluation

Prerequisites for this test

(1) Read the ATNA Testing Resources page before proceeding with this test.

(2) To perform this test, your digital certificate must be set up on your system (server and/or client). Follow the instructions in test 11100 to obtain digital certificate(s) for your test system(s).

(3) You should create your ATNA Questionnaire (test 11106) prior to running this test.

- The ATNA Questionnaire has a "TLS Tests" tab that identifies the inbound /outbound communications you support.

- That tab determines which of the "Server" and "Client" tests that you must run below.

- You will also record your successful results on that tab.

Overview of the test

In this test, you will use the Gazelle Security Suite (GSS) tool (https://gazelle.ihe.net/gss) to verify that you are able to communicate with TLS clients and servers using digital certificates.

The GSS tool contains multiple client and server simulators that check:

- transport over TLS v1.2, including protocol (DICOM, HL7/MLLP, HTTPS/WS, or syslog)

- cipher suite (TLS_DHE_RSA_WITH_AES_256_GCM_SHA384, and more....),

- certificate authentication

- Digital certificates for pre-Connectathon & Connectathon testing are generated by GSS. See test 11100.

The TLS simulators available in the GSS tool are listed in Column 1 in the following table, along with notes on which you should use for this test:

| Simulator Names (keyword) | To be tested by... | Simulator configuration |
|---|---|---|
| Server DICOM TLS 1.2 Floor; Server HL7 TLS 1.2 Floor; Server HTTPS/WS TLS 1.2 Floor; Server Syslog TLS 1.2 Floor | Connectathon test system that supports the "STX: TLS 1.2 Floor option" and is a client that: initiates a TLS connection with DICOM protocol; initiates a TLS connection with MLLP protocol (i.e. HL7 v2 sender); initiates a TLS connection for a webservices transaction; initiates a TLS connection to send an audit message over TLS syslog | TLS 1.2 with 4 'strong' ciphers. You may test with just one of the ciphers. |
| Server RAW TLS 1.2 INVALID FQDN | Connectathon test system that is a client supporting the "FQDN Validation of Server Certificate option" | TLS 1.2 with 4 'strong' ciphers; see list above. Certificate has an invalid value for subjectAltName. |
| Client TLS 1.2 Floor | Connectathon test system that supports the "STX: TLS 1.2 Floor option" and is a server that: accepts a TLS connection with DICOM protocol; accepts a TLS connection with MLLP protocol (i.e. HL7 v2 responder); accepts a TLS connection for a webservices transaction; accepts a TLS connection to receive an audit message over TLS syslog | TLS 1.2 with 4 'strong' ciphers; see list above. |

Location of the Gazelle Security Suite (GSS) tool:

Log in to the GSS tool

When logging in to GSS, you will use your username & password from Gazelle Test Management for your testing event. There are separate CAS systems for different instances of Gazelle Test Management, and you will have to take this into account when logging in to GSS:

- The European CAS is linked to Gazelle Test Management at http://gazelle.ihe.net/TM/ <---This will be used for the 2022 IHE EU/NA Connectathon

- The North American CAS is linked to Gazelle Test Management at https://gazelle.iheusa.org/gazelle-na/

- If you don't have an account, you can create a new one on the Gazelle Test Management home page.

On the GSS home page (http://gazelle.ihe.net/gss) find the "Login" link at the upper right of the page.

- Select either "European Authentication" or "North American Authentication"

- Enter the username and password from the corresponding Gazelle Test Management instance linked above.

Instructions for outbound transactions (Client side is tested)

If your test system (SUT) does not act as a client (i.e., does not initiate any transactions), then skip this portion of the test and only test the Server side below.

If your SUT acts as a client, you must be able to reach the TLS server's public IP. Test your client by connecting to the Server Simulators in the Gazelle Security Suite tool.

1. On the home page for the Gazelle Security Suite, select menu TLS/SSL-->Simulators-->Servers to find the list of server simulators. There are servers for different protocols (DICOM, HL7...) and for different ATNA options (e.g., TLS 1.2 Floor...).

- You will test only the protocols you support -- those listed on the "TLS Tests" tab of your ATNA questionnaire.

2. Configure your client to connect to the test TLS server.

3. Check that the server is started before trying to connect to it. Click on the link for the server you want and look for status "Running"

4. In your SUT, perform a connection (eg send a query) to the test server. The TLS connection is valid, but at transaction level you will get invalid replies because we are only checking for the TLS connection.

5. You should then get a time-stamped entry in the results list at the bottom of the page. Blue dot means OK, red NOT OK.

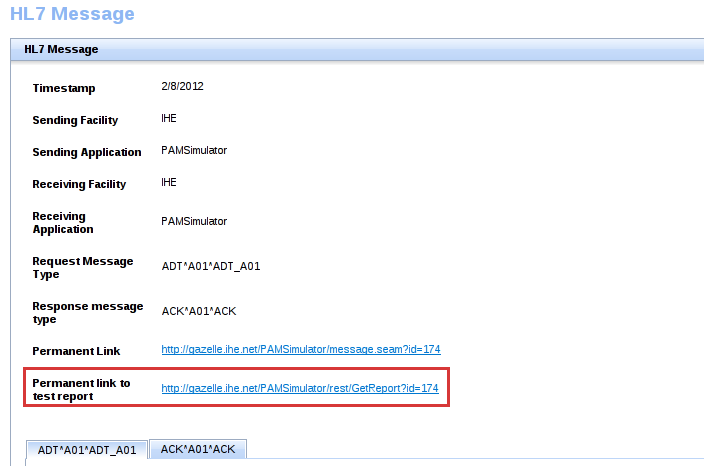

6. For each successful connection, view the result with the icon in the "Action" column. Copy the Permanent link (URL) to the result into your ATNA Questionnaire, on the "TLS Tests" tab. The link must be formatted like https://.../connection.seam?id=...

7. Repeat these steps for each supported protocol (HL7v2, DICOM, Syslog server, ...): e.g., if your system has no DICOM capabilities, you can skip that portion of the test.
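If you want to try the TLS handshake from a script before configuring your real client, the client-side steps above could be sketched in Python as follows. This is only an illustration under stated assumptions: the helper names are hypothetical, the file paths, host and port in the commented usage are placeholders you must replace with your own certificate files and the simulator address shown in GSS, and a raw handshake does not exercise the DICOM, HL7 or web services layer of your product.

```python
import socket
import ssl


def build_client_context(ca_file=None, cert_file=None, key_file=None):
    """TLS 1.2 client context; verifies the server and optionally presents a certificate."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)  # server verification is on by default
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    ctx.maximum_version = ssl.TLSVersion.TLSv1_2
    if ca_file:
        ctx.load_verify_locations(cafile=ca_file)  # CA certificate downloaded from GSS
    if cert_file:
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)  # your GSS certificate
    return ctx


def try_handshake(host, port, ctx, timeout=10.0):
    """Open a TCP connection, perform the TLS handshake, return (version, cipher name)."""
    with socket.create_connection((host, port), timeout=timeout) as raw:
        with ctx.wrap_socket(raw, server_hostname=host) as tls:
            return tls.version(), tls.cipher()[0]


# Hypothetical usage (placeholders, not real values):
# ctx = build_client_context("gss-ca.pem", "sut-cert.pem", "sut-key.pem")
# print(try_handshake("simulator.example.org", 10443, ctx))
```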

Instructions for inbound transactions (Server side is tested)

If your test system (SUT) does not act as a server (i.e., does not respond to any transactions initiated by others), then skip this portion of the test and only perform the Client test above.

If your SUT acts as a server (i.e. a responder to IHE transactions), your server must be accessible from the outside so that the GSS tool, as a client simulator, can connect to your SUT.

1. On the home page for the Gazelle Security Suite, select menu TLS/SSL-->Simulators-->Clients to find the list of client simulators.

2. In the "Start Connection" section of the page, you will have to specify, for each supported protocol :

- Client type : protocol supported (HL7, DICOM, WS, SYSLOG, or RAW)

- You will test only the protocols you support -- those listed on the "TLS Tests" tab of your ATNA questionnaire.

- Target host : public IP of your server

- Target port : public port of your server

3. Then click on "Start client".

4. You should then get a time-stamped entry in the results list. Blue means OK, red NOT OK.

5. For each successful connection, view the result at the bottom of the page using the icon in the "Actions" column. Copy the Permanent Link (URL) to the result into your ATNA Questionnaire, on the "TLS Tests" tab. The link must be formatted like https://.../connection.seam?id=...

6. Repeat these steps for each supported protocol (HL7v2, DICOM, Syslog client, ...) : e.g., if your system has no DICOM capabilities, you can skip that portion of the test.
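Before pasting a Permanent Link into the "TLS Tests" tab, you may want to confirm that it has the expected shape. The sketch below checks only the pattern given in the instructions (an https URL whose path ends in connection.seam and that carries an id parameter); the function name and the example URLs in the comments are hypothetical.

```python
from urllib.parse import parse_qs, urlparse


def looks_like_permanent_link(url):
    """True if url resembles https://.../connection.seam?id=..."""
    parts = urlparse(url)
    ids = parse_qs(parts.query).get("id", [])
    return (
        parts.scheme == "https"
        and parts.path.endswith("/connection.seam")
        and len(ids) == 1
    )


# Illustrative inputs only:
# looks_like_permanent_link("https://gss.example.org/gss/connection.seam?id=123")
# looks_like_permanent_link("https://gss.example.org/gss/home.seam")
```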

Evaluation

Depending on the testing event, the results of this test may be reviewed in advance. More typically, it will be reviewed and graded by a Monitor during the test event itself (e.g. during Connectathon week).

The tool reports success or failure for each test you perform. Your test system must demonstrate successful TLS handshake for each inbound and outbound protocol you support.

If you are performing this test in preparation for an IHE Connectathon, a Connectathon monitor will verify your results as follows. The monitor will:

- Access the TLS tests tab in the ATNA Questionnaire. (The SUT only performs tests for the protocols it supports, and skips the ones not supported.)

- For each "SERVER" tested side :

- The test result must be PASSED.

- During a Connectathon, these items can also be verified:

- the SUT host must be the IP specified in the configuration of the system.

- the SUT port must be the one specified in the configuration of the system for the protocol.

- For each "CLIENT" tested side :

- The connection must succeed (blue dot).

- During a Connectathon, this item can also be verified:

- the host in the SUT address must be the IP specified in the configuration of the system. The port is not verified for outbound transactions.

- During the Connectathon, the monitor may choose to ask the vendor to re-run a test if the results raise questions about the system's support of TLS.


11110: Authentication error cases

Overview of the test

*** If your ATNA Secure Node/Secure Application is only a client (ie it only initiates transactions), then this test case is not applicable for you. Skip it. ***

This test exercises several error cases. You will use the TLS Tool in the Gazelle Security Suite as a simulated client, trying to connect to a Secure Node (SN) or Secure Application (SA) acting as a server.

Prerequisite for this test

Perform test 11109 Authentication Test before running this 'error cases' test.

Location of the ATNA Tools: Gazelle Security Suite

Log in to the GSS tool

When logging in to GSS, you will use your username & password from Gazelle Test Management for your testing event. There are separate CAS systems for different instances of Gazelle Test Management, and you will have to take this into account when logging in to GSS:

- The European CAS is linked to Gazelle Test Management at http://gazelle.ihe.net/TM/ <---This will be used for the 2022 IHE Connectathon

- The North American CAS is linked to Gazelle Test Management at https://gazelle.iheusa.org/gazelle-na/

- If you don't have an account, you can create a new one on the Gazelle Test Management home page.

On the GSS home page (http://gazelle.ihe.net/gss) find the "Login" link at the upper right of the page.

- Select either "European Authentication" or "North American Authentication"

- Enter the username and password from the corresponding Gazelle Test Management instance linked above.

Instructions

- Select menu TLS/SSL-->Testing-->Test Cases

- Run each of the error test cases listed:

- IHE_ErrorCase_Corrupted

- IHE_ErrorCase_Expired

- IHE_ErrorCase_Revoked

- IHE_ErrorCase-Self-Signed

- IHE_ErrorCase_Unknown

- IHE_ErrorCase_Without_Authentication

- IHE_ErrorCase_Wrong_Key

- Once you are on the 'Run a test' page, use the 'Client type' dropdown list to select the transport supported on your server (HL7v2, DICOM, HL7, DICOM_ECHO, WEBSERVICE, SYSLOG, or RAW)

- Input the host / IP address and port of your system and click on 'Run'.

- If you implement several transports as a server, you should mix message types over those error test cases in order to have at least one implemented protocol covered by one step. It is not necessary to run each of the test cases for each transport.

- After each test case, find your result in the list of Test Executions.

- Capture the permanent links to your PASSED results. Copy/paste the links into Gazelle Test Management as your results for test 11110.
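The error cases above probe how your server reacts to clients with missing or unacceptable certificates. As a rough illustration (not a statement of what GSS checks internally), a server that requires and verifies client certificates could be configured in Python as sketched below. The helper name and file parameters are hypothetical, and this sketch alone does not cover revocation: that would additionally need a CRL loaded into the context and a flag such as `ssl.VERIFY_CRL_CHECK_LEAF` set in `verify_flags`.

```python
import ssl


def build_server_context(cert_file=None, key_file=None, ca_file=None):
    """TLS server context that refuses clients without a certificate from a trusted CA."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # Require a client certificate; the handshake fails if none is presented
    # or if the one presented does not chain to a CA in the trust store.
    ctx.verify_mode = ssl.CERT_REQUIRED
    if cert_file:
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)  # server's own certificate
    if ca_file:
        ctx.load_verify_locations(cafile=ca_file)  # only this CA is trusted for clients
    return ctx
```

Trusting only the Connectathon CA, rather than the system default trust store, is what lets such a server reject certificates from unknown issuers.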

Evaluation

Depending on the testing event, the results of this test may be reviewed in advance. More typically, it will be reviewed and graded by a Monitor during the test event itself (e.g. during Connectathon week).

Each error case must have a result of 'PASSED'.

Each transport type (HL7v2, DICOM, HL7, DICOM_ECHO, WEBSERVICE, SYSLOG, or RAW) implemented by your system as a server must have been tested at least one time in the list of error cases.

If you are performing this test in preparation for a Connectathon, a Connectathon monitor will verify your results pasted into each test step.


11116: Audit message check

Overview of the test

This test applies to a Secure Node/Application that supports the ATX: TLS Syslog or ATX: UDP Syslog Option.

In this test, a Secure Node or Secure Application tests audit messages it sends.

- We use the Gazelle Security Suite (GSS) tool to test the content of the audit message against the schema and against requirements documented in the IHE Technical Framework for some transactions.

- In this test, we do not test the transport of the audit message (TLS or UDP).

The Gazelle Security Suite tool provides the ability to validate audit messages against the DICOM schema and the audit message definitions for many transactions in IHE Technical Frameworks. (We are no longer testing the RFC 3881 schema; the ATNA profile requires support for the DICOM schema for syslog audit messages sent via ITI-20.)

Location of the ATNA Tools: Gazelle Security Suite

- For European Connectathon: http://gazelle.ihe.net/gss

- For North America Connectathon: http://gazelle.iheusa.org/gss

Log in to the GSS tool

When logging in to GSS, you will use your username & password from Gazelle Test Management for your testing event. There are separate CAS systems for different instances of Gazelle Test Management, and you will have to take this into account when logging in to GSS.

If you don't have an account, you can create a new one on the Gazelle Test Management home page.

On the GSS home page find the "Login" link at the upper right of the page; enter your Gazelle username and password if asked. If you were already logged in to another Gazelle application, GSS will log you in automatically.

Instructions

You may perform this test directly in the ATNA Questionnaire **or** you may use the Gazelle EVSClient tool. If you are preparing for an IHE Connectathon, you should use the instructions below for the ATNA Questionnaire.

---->Instructions for checking audit messages using the ATNA Questionnaire:

- Create a new ATNA Questionnaire for your test system using the instructions for test 11106.

- Find the Audit Messages tab in the questionnaire. That tab contains "Instructions" and enables you to upload and validate audit messages directly on that tab.

- You should validate all messages that you have marked "Implemented".

- When you are done, find the Permanent Link to your ATNA Questionnaire. Copy/paste that link into the chat window in Gazelle Test Management for test 11116.

---->Instructions for checking audit messages using the EVSClient tool:

- In the Gazelle EVSClient, select menu IHE-->Audit messages-->Validate

- Select the Add button, and upload the XML file for your audit message

- From the Model based validation dropdown list, select the entry that matches your audit message. (Note that additional validations will be added over time.)

- Select the Validate button.

- You should validate all audit messages associated with functionality & transactions supported by your test system.

- In the Validation Results displayed, find the Permanent Link to the results. Copy/paste the link(s) into the chat window in Gazelle Test Management for 11116.

Evaluation

Depending on the testing event, the results of this test may be reviewed in advance. More typically, it will be reviewed and graded by a Monitor during the test event itself (e.g. during Connectathon week).

The tool reports the results of the validation of your messages. We are looking for PASSED results.

11117: Send audit or event message to Syslog Collector

Overview of the test

In this test, a client sends audit records or event reports using transaction [ITI-20] Record Audit Event to the Syslog Collector tool acting as an Audit Record Repository or Event Repository. The Syslog Collector is one of the tools embedded in the Gazelle Security Suite.

This test is performed by an ATNA Secure Node, Secure Application or Audit Record Forwarder. It is also performed by a SOLE Event Reporter.

Note that this test checks the transport of audit messages. The content of your audit message is verified in a different test.

Location of the ATNA Tools: Gazelle Security Suite (GSS)

Log in to the GSS tool

When logging in to GSS, you will use your username & password from Gazelle Test Management for your testing event. There are separate CAS systems for different instances of Gazelle Test Management, and you will have to take this into account when logging in to GSS:

- The European CAS is linked to Gazelle Test Management at http://gazelle.ihe.net/TM/ <---This will be used for the 2022 IHE Connectathon

- The North American CAS is linked to Gazelle Test Management at https://gazelle.iheusa.org/gazelle-na/

- If you don't have an account, you can create a new one on the Gazelle Test Management home page.

On the GSS home page (http://gazelle.ihe.net/gss) find the "Login" link at the upper right of the page.

- Select either "European Authentication" or "North American Authentication"

- Enter the username and password from either Gazelle Test Management linked above.

Instructions

- Access the Syslog Collector in GSS under menu Audit Trail --> Syslog Collector. This page displays the tool's IP address and its UDP and TCP-TLS ports.

- Configure your application to send your audit messages (event reports) to the Syslog Collector.

- Then trigger any event that initiates an ITI-20 transaction. This event may be an IHE transaction or other system activity (e.g. system start/stop or one of the SOLE events). Your system should then send the message to the Syslog Collector.

- IMPORTANT NOTE: The Syslog Collector tool is a free, shared resource. It is intended for brief, intermittent use. Developers SHOULD NOT configure their system to send syslog messages to the tool on a long-term basis. Flooding the tool with audit messages can make it unavailable for use by others.
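
As a rough illustration of what a single test message over UDP could look like, the sketch below builds one RFC 5424 syslog message around an audit message and defines a helper to send it once. The function names, host names, PRI and MSGID values are placeholders chosen for this example; take the real values from [ITI-20] and the address and port from the Syslog Collector page.

```python
import socket
from datetime import datetime, timezone

def build_syslog_message(audit_xml, hostname, app_name, pri, msgid, now=None):
    """Build one RFC 5424 syslog message carrying an audit message, as bytes."""
    now = now or datetime.now(timezone.utc)
    timestamp = now.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%S.%fZ")
    # HEADER: PRI VERSION TIMESTAMP HOSTNAME APP-NAME PROCID MSGID, then STRUCTURED-DATA.
    # "-" is the RFC 5424 nil value, used here for PROCID and STRUCTURED-DATA.
    header = f"<{pri}>1 {timestamp} {hostname} {app_name} - {msgid} -"
    # RFC 5424 marks a UTF-8 MSG part with a leading byte order mark.
    return header.encode("ascii") + b" \xef\xbb\xbf" + audit_xml.encode("utf-8")

def send_udp(message, host, port):
    """Send a single datagram; call this once per test, not in a loop."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(message, (host, port))

# Placeholder values for illustration only.
message = build_syslog_message(
    "<AuditMessage/>", "myhost", "myapp", pri=85, msgid="EXAMPLE",
    now=datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc),
)
print(message)
# send_udp(message, "collector.example.org", 514)  # hypothetical address and port
```

The send call is commented out on purpose, in keeping with the note above about brief, intermittent use of the shared tool.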

Evaluation

You must check that your audit message has been received by the Syslog Collector and that the protocol SYSLOG is correctly implemented.

- Go to Gazelle Security Suite, on page Audit Trail > Syslog Collector.

- Filter the list of received messages by the host or the IP of the sender, and find the message you sent according to the timestamps.

- Click on the magnifying glass to display the message details.

- If the protocol is UDP or TLS, and the entry shows a message with content, no errors, and successful RFC5424 parsing, then the test is successful. There is an example screenshot below.

- Copy the URL to your successful result and paste it into your local Gazelle Test Management as the Log Return file for test 11117.

- Do not forget to stop sending audit-messages to the Syslog Collector once you’ve finished the test. If your system sends a large amount of messages, administrators of the tool may decide to block all your incoming transactions to prevent spam.

Tips

TCP Syslog uses the same framing requirement as TLS Syslog. You can first use the TCP port of the Syslog Collector to debug your implementation. Keep in mind that the IHE ATNA Profile expects at least UDP or TLS for actors that produce SYSLOG messages.
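
The framing shared by TCP and TLS syslog is the octet-counting scheme of RFC 5425: each message is prefixed with its length in bytes and a space. A minimal sketch, with hypothetical function names, that frames a message and sends it over plain TCP for debugging or over TLS when a context is supplied:

```python
import socket

def frame_octet_counting(syslog_message):
    """Prefix a syslog message (bytes) with its byte length and one space."""
    return str(len(syslog_message)).encode("ascii") + b" " + syslog_message

def send_framed(syslog_message, host, port, tls_context=None):
    """Send one framed message over TCP, or over TLS if an ssl.SSLContext is given."""
    frame = frame_octet_counting(syslog_message)
    with socket.create_connection((host, port), timeout=10) as raw:
        if tls_context is None:
            raw.sendall(frame)
        else:
            with tls_context.wrap_socket(raw, server_hostname=host) as tls:
                tls.sendall(frame)

print(frame_octet_counting(b"<85>1 - - - - - -"))
```

The length counts bytes, not characters, so the message must be encoded to UTF-8 before it is framed. For TLS, the context would need to be loaded with the Connectathon certificate and trust chain, which this sketch leaves out.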

11118: AuditEvent Resource check

THIS TEST IS UNDER CONSTRUCTION AND NOT YET AVAILABLE...

Overview of the test

This test applies to a Secure Node/Application that supports the ATX: FHIR Feed Option.

The RESTful ATNA TI Supplement, Section 3.20.4.2.2.1, defines a mapping between DICOM Audit Messages and FHIR AuditEvent Resources. Implementers should create their AuditEvent Resources according to the defined mappings, and should expect that they will be examined according to those mappings at IHE Connectathons.

- In test EVS_FHIR_Resource_Validation, a Secure Node or Secure Application is asked to use the Gazelle EVSClient tool to test the content of the AuditEvent Resource against the baseline FHIR Requirements.

- In this AuditEvent Resource check test: Gazelle tools will be enhanced to provide additional validation of the AuditEvent Resource based on constraints in [ITI-20] and on the mapping in the RESTful ATNA TI Supplement, Section 3.20.4.2.2.1. That mapping will enable Gazelle tooling to verify specifics of the IHE-defined audit records that are sent as FHIR AuditEvent Resources.

Instructions

---->Instructions for checking additional constraints on AuditEvent Resources (mapping defined in ITI TF-2b: 3.20.4.2.2.1):

- Instructions TBD when tool update is complete.

Evaluation

Depending on the testing event, the results of this test may be reviewed in advance. More typically, it will be reviewed and graded by a Monitor during the test event itself (e.g. during Connectathon week).

The tool reports the results of the validation of your Resources. We are looking for PASSED results.

Do_This_First tests

This section contains test cases that contain instructions or other background material that prepares a test system for interoperability testing at an IHE Connectathon.

Ideally, the preparation described in these tests should be completed BEFORE the Connectathon begins.

ITI 'Do/Read This First' Tests

This is an index of Do This First and Read This First tests defined for profiles in the IHE IT Infrastructure (ITI) domain.

APPC_Read_This_First

Introduction

This informational 'test' provides an overview of APPC tests and defines value sets used when creating Patient Privacy Policy Consent documents during Connectathon. Please read what follows and complete the preparation described before the Connectathon.

Instructions

WHICH APPC TESTS SHOULD I RUN?

The APPC profile enables creating Patient Privacy Policy Consent documents in a great many variations. We have created test cases that cover some of the common use cases in the APPC profile for consent documents that would typically be created for patients in health care facilities, e.g. consent to disclose a patient's data to a facility, or to restrict disclosure of data from a specific individual provider.

Content Creators:

- Run tests for two of the APPC use cases; you may choose from the Meta test which two you want to run. Test names are APPC_Case*.

- Run one test -- APPC_Other_Use_Case -- that allows you to create a Patient Privacy Policy Consent document of your choosing. We hope you use this to demonstrate the depth & breadth of your capabilities as an APPC Content Creator.

- Support your APPC Content Consumer test partners that want to demonstrate their View or Structured Policy Processing capabilities using your APPC document(s).

- Optional: There is no requirement in APPC that the Content Creator be able to act as an XDS Doc Source and thus be able to submit your APPC documents to an XDS Repository/Registry. If you have this capability, you can run test APPC_Submit_PolicyConsentDoc.

Content Consumers:

- If you support the View Option, we assume that you can render any APPC document from a Content Creator

- If you support the Structured Policy Processing Option, you will run the associated test.

HOW DO APPC DOCUMENTS GET FROM THE CONTENT CREATOR TO THE CONTENT CONSUMER?

The APPC Profile does not mandate how Consent documents get from a Content Creator to a Content Consumer. It could be XDS, another IHE profile, or a non-IHE method.

At the Connectathon, we ask Content Creators to upload the Patient Privacy Policy Consent documents they create into the Samples area of Gazelle (menu Connectathon-->Connectathon-->List of samples, on the 'Samples to share' tab). Content Consumers will find uploaded samples under the same menu on the 'Samples available for rendering' tab.

WHICH PATIENT SHOULD BE USED FOR APPC TESTS?

Each APPC Patient Privacy Policy Consent document applies to a single PATIENT. In a consent document, the patient ID and assigning authority values are encoded with the AttributeId urn:ihe:iti:ser:2016:patient-id . A patient may have more than one privacy policy consent.

We do not identify a single 'test patient' that must be used for creating APPC documents for Connectathon testing. The Content Creator may include any valid Patient ID. If the policy restricts or allows access based on values in the XDS metadata for a patient's documents, the Content Creator may use the Patient ID of the clinical document(s) the policy applies to.

WHAT PRIVACY POLICIES, & OTHER VALUE SETS ARE DEFINED FOR APPC TESTING?

APPC Patient Privacy Policy Consent documents rely on the affinity domain agreeing to a set of PATIENT PRIVACY POLICIES that apply to ORGANIZATIONS and INDIVIDUAL PROVIDERS. These policies, organizations, providers are identified by unique identifiers that are recognized within the affinity domain, and are encoded in a patient's consent document.

To enable the APPC Content Creator to specify different policies, this test defines values for various attributes used in policies:

- Policies - that a patient may apply to his/her documents

- Facilities - where a patient could receive care; facilities have an identified type.

- Providers - who provide care for a patient; providers have an identified role.

- Doc Sources - who create a patient's document(s) shared in an XDS environment

- Confidentiality Codes - applied to a patient's document that can be part of a policy

- Purpose of Use -

The tables below contain value sets that are defined for the purpose of Connectathon testing of the APPC profile.

- APPC tests will ask Content Creators to use these when creating APPC Patient Privacy documents.

- APPC Content Consumers should recognize these values.

POLICIES FOR CONNECTATHON TESTING OF APPC:

| Policies (APPC use case) | PolicySetIdReference | Policy description |
|---|---|---|
| FOUNDATIONAL POLICY (all use cases) | urn:connectathon:bppc:foundational:policy | By default, in this Connectathon affinity domain, document sharing is based on the value of Confidentiality Code (DocumentEntry.confidentialityCode). This policy (inherited from BPPC) is applied to all documents. A patient may also choose to apply one of the additional policies below. |
| FULL ACCESS TO ALL | urn:connectathon:policy:full-access | The patient agrees that the document may always be shared. (This is equivalent to having a confidentiality code of "U".) |
| DENY ACCESS TO ALL | urn:connectathon:policy:deny-access | The patient prohibits the document from ever being shared. (This is equivalent to having a confidentiality code of "V".) |
| DENY ACCESS EXCEPT TO PROVIDER | urn:connectathon:policy:deny-access-except-to-provider | The patient prohibits the document from being shared except with the provider(s) identified in the consent document. |
| DENY ACCESS TO PROVIDER | urn:connectathon:policy:deny-access-to-provider | The patient prohibits the document from being shared with the provider(s) identified in the consent document. The referenced individual provider(s) is prohibited from accessing this patient's documents (i.e. no read or write access). |
| DENY ACCESS EXCEPT TO FACILITY | urn:connectathon:policy:deny-access-except-to-facility | The patient prohibits the document from being shared except with the facility(ies) identified in the consent document. |
| DENY TO ROLE | urn:connectathon:policy:deny-access-to-role | The patient prohibits the document from being shared with providers who have the role(s) identified in the consent document. |
| FULL ACCESS TO ROLE | urn:connectathon:policy:full-access-to-role | The patient allows the document to be shared with providers who have the role(s) identified in the consent document. The patient prohibits the document from being shared with providers with any other role(s). |
| LIMIT DOCUMENT VISIBILITY (use case 6) | 1.3.6.1.4.1.21367.2017.7.104 | The patient prohibits sharing the referenced clinical document(s) and this privacy policy consent document with any healthcare provider or facility. |

ORGANIZATIONS/FACILITIES defined in the "Connectathon Domain":

| XACML AttributeId --> Facility | urn:ihe:iti:appc:2016:document-entry:healthcare-facility-type-code (DocumentEntry.healthcareFacilityTypeCode) | urn:oasis:names:tc:xspa:1.0:subject:organization-id |
|---|---|---|
| Connectathon Radiology Facility for IDN One | code="Fac-A" displayName="Caregiver Office" codeSystem="1.3.6.1.4.1.21367.2017.3" | urn:uuid:e9964293-e169-4298-b4d0-ab07bf0cd78f |
| Connectathon Radiology Facility for NGO Two | code="Fac-A" displayName="Caregiver Office" codeSystem="1.3.6.1.4.1.21367.2017.3" | urn:uuid:e9964293-e169-4298-b4d0-ab07bf0cd12c |
| Connectathon Dialysis Facility One | code="Fac-B" displayName="Outpatient Services" codeSystem="1.3.6.1.4.1.21367.2017.3" | urn:uuid:a3eb03db-0094-4059-9156-8de081cb5885 |
| Connectathon Dialysis Facility Two | code="Fac-B" displayName="Outpatient Services" codeSystem="1.3.6.1.4.1.21367.2017.3" | urn:uuid:be4d27c3-21b8-481f-9fed-6524a8eb9bac |

INDIVIDUAL HEALTHCARE PROVIDERS defined in the "Connectathon Domain":

| XACML AttributeId --> Provider | urn:oasis:names:tc:xacml:1.0:subject:subject-id | urn:oasis:names:tc:xspa:1.0:subject:npi | urn:oasis:names:tc:xacml:2.0:subject:role |
|---|---|---|---|
| Dev Banargee | devbanargee | urn:uuid:a97b9397-ce4e-4a57-b12a-0d46ce6f36b7 | code="105-007" |
| Carla Carrara | carlacarrara | urn:uuid:d973d698-5b43-4340-acc9-de48d0acb376 | code="105-114" |
| Jack Johnson | jackjohnson | urn:uuid:4384c07a-86e2-40da-939b-5f7a04a73715 | code="105-114" displayName="Radiology Technician" codeSystem="1.3.6.1.4.1.21367.100.1" |
| Mary McDonald | marymcdonald | urn:uuid:9a879858-8e96-486b-a2be-05a580f0e6ee | code="105-007" displayName="Physician/Medical Oncology" codeSystem="1.3.6.1.4.1.21367.100.1" |
| Robert Robertson | robertrobertson | urn:uuid:b6553152-7a90-4940-8d6a-b1017310a159 | code="105-007" displayName="Physician/Medical Oncology" codeSystem="1.3.6.1.4.1.21367.100.1" |
| William Williamson | williamwilliamson | urn:uuid:51f3fdbe-ed30-4d55-b7f8-50955c86b2cf | code="105-003" displayName="Nurse Practitioner" codeSystem="1.3.6.1.4.1.21367.100.1" |

XDS Document Sources:

| XACML AttributeId --> Source Id: | urn:ihe:iti:appc:2016:source-system-id (SubmissionSet.sourceId) |
|---|---|
| Use sourceId as assigned in Gazelle to Connectathon XDS Doc Sources | Various XDS Document Sources systems |

CONFIDENTIALITY CODES:

| XACML AttributeId --> ConfidentialityCode: | urn:ihe:iti:appc:2016:confidentiality-code (DocumentEntry.confidentialityCode) |
|---|---|
| normal | code="N" displayName="normal" codeSystem="2.16.840.1.113883.5.25" |
| restricted | code="R" displayName="restricted" codeSystem="2.16.840.1.113883.5.25" |
| very restricted | code="V" displayName="very restricted" codeSystem="2.16.840.1.113883.5.25" |
| unrestricted | code="U" displayName="unrestricted" codeSystem="2.16.840.1.113883.5.25" |

PURPOSE OF USE:

| XACML AttributeId --> Purpose of use: | urn:oasis:names:tc:xspa:1.0:subject:purposeofuse |
|---|---|
| TREATMENT | code="99-101" displayName="TREATMENT" codeSystem="1.3.6.1.4.1.21367.3000.4.1" |
| EMERGENCY | code="99-102" displayName="EMERGENCY" codeSystem="1.3.6.1.4.1.21367.3000.4.1" |
| PUBLICHEALTH | code="99-103" displayName="PUBLICHEALTH" codeSystem="1.3.6.1.4.1.21367.3000.4.1" |
| RESEARCH | code="99-104" displayName="RESEARCH" codeSystem="1.3.6.1.4.1.21367.3000.4.1" |
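
A Content Creator or Content Consumer might keep these Connectathon value sets in a small lookup structure so that values in a consent document can be checked against them. The sketch below is one possible arrangement; the dictionary and function names are hypothetical, and only the identifiers and codes come from the tables above.

```python
# PolicySetIdReference values defined for Connectathon testing of APPC.
POLICIES = {
    "urn:connectathon:bppc:foundational:policy": "FOUNDATIONAL POLICY",
    "urn:connectathon:policy:full-access": "FULL ACCESS TO ALL",
    "urn:connectathon:policy:deny-access": "DENY ACCESS TO ALL",
    "urn:connectathon:policy:deny-access-except-to-provider": "DENY ACCESS EXCEPT TO PROVIDER",
    "urn:connectathon:policy:deny-access-to-provider": "DENY ACCESS TO PROVIDER",
    "urn:connectathon:policy:deny-access-except-to-facility": "DENY ACCESS EXCEPT TO FACILITY",
    "urn:connectathon:policy:deny-access-to-role": "DENY TO ROLE",
    "urn:connectathon:policy:full-access-to-role": "FULL ACCESS TO ROLE",
    "1.3.6.1.4.1.21367.2017.7.104": "LIMIT DOCUMENT VISIBILITY",
}

# Codes from codeSystem 2.16.840.1.113883.5.25.
CONFIDENTIALITY_CODES = {"N": "normal", "R": "restricted", "V": "very restricted", "U": "unrestricted"}

# Codes from codeSystem 1.3.6.1.4.1.21367.3000.4.1.
PURPOSE_OF_USE = {"99-101": "TREATMENT", "99-102": "EMERGENCY", "99-103": "PUBLICHEALTH", "99-104": "RESEARCH"}

def check_consent_values(policy_id, confidentiality_code=None, purpose_code=None):
    """Return a list of values that are not in the Connectathon value sets."""
    problems = []
    if policy_id not in POLICIES:
        problems.append(f"unknown policy: {policy_id}")
    if confidentiality_code is not None and confidentiality_code not in CONFIDENTIALITY_CODES:
        problems.append(f"unknown confidentiality code: {confidentiality_code}")
    if purpose_code is not None and purpose_code not in PURPOSE_OF_USE:
        problems.append(f"unknown purpose of use: {purpose_code}")
    return problems

print(check_consent_values("urn:connectathon:policy:deny-access", "V", "99-101"))
print(check_consent_values("urn:connectathon:policy:typo", "X"))
```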

Evaluation

There is no evaluation for this informational test. If the systems testing the APPC Profile do not do the set-up described above, then APPC tests at Connectathon will not work.

BPPC_Read_This_First

Introduction

This is an informational 'test'. We want all actors involved in testing the BPPC Profile and the BPPC Enforcement Option to read the "Privacy Policy Definition for IHE Connectathon Testing".

Instructions

Prior to arriving at the Connectathon, read this document: Privacy Policy Definition for IHE Connectathon Testing. This contains the policy for XDS Affinity Domains at the Connectathon, including 2 BPPC-related items.

Evaluation

There is no evaluation for this informational test. If the systems do not do the set-up described above, then BPPC Profile tests and BPPC Enforcement Options tests at Connectathon will not work.

CSD_Load_Directory_Test_Data

Introduction

This is a "task" (i.e. not a test) that ensures that your CSD Care Services Directory is loaded with the entries that we will use as part of Connectathon testing.

Instructions

The Care Services Directory is loaded with Connectathon test data: (1) Codes, and (2) Organization, Provider, Facility, and Service information.

(1) Load Connectathon code sets:

ITI TF-1:35.1.1.1 states, "Implementing jurisdictions may mandate code sets for Organization Type, Service Type, Facility Type, Facility Status, Provider Type, Provider Status, Contact Point Type, Credential Type, Specialization Code, and language code. A Care Services Directory actors shall be configurable to use these codes, where mandated."

For Connectathon testing, we define these codes and ask that you load them onto your Directory prior to arriving at the connectathon. They are documented in the format defined in IHE's SVS (Sharing Value Sets) profile, though support for SVS is not mandated in IHE.

The code sets are found here in Google Drive under IHE Documents > Connectathon > test_data > ITI-profiles > CSD-test-data > CSD_Directory_Codes. (They are also available in the SVS Simulator: http://gazelle.ihe.net/SVSSimulator/browser/valueSetBrowser.seam)

- 1.3.6.1.4.1.21367.200.101-CSD-organizationTypeCode.xml

- 1.3.6.1.4.1.21367.200.102-CSD-serviceTypeCode.xml

- 1.3.6.1.4.1.21367.200.103-CSD-facilityTypeCode.xml

- 1.3.6.1.4.1.21367.200.104-CSD-facilityStatusCode.xml

- 1.3.6.1.4.1.21367.200.105-CSD-providerTypeCode.xml

- 1.3.6.1.4.1.21367.200.106-CSD-providerStatusCode.xml

- 1.3.6.1.4.1.21367.200.108-CSD-credentialTypeCode.xml

- 1.3.6.1.4.1.21367.200.109-CSD-specializationTypeCode.xml

- 1.3.6.1.4.1.21367.200.110-CSD-languageCode.xml
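
If your Directory needs the codes in another form, one option is to read the concepts out of each file before loading. The sketch below assumes the files carry SVS-style Concept elements with code, displayName and codeSystem attributes; it matches on the local element name so it does not depend on the exact namespace. The function names and the sample content are hypothetical.

```python
import xml.etree.ElementTree as ET

def local_name(tag):
    """Strip an XML namespace of the form {uri} from an element tag."""
    return tag.rsplit("}", 1)[-1]

def read_concepts(xml_text):
    """Return (code, displayName, codeSystem) for every Concept element found."""
    root = ET.fromstring(xml_text)
    return [
        (element.get("code"), element.get("displayName"), element.get("codeSystem"))
        for element in root.iter()
        if local_name(element.tag) == "Concept"
    ]

# Hypothetical content used only to exercise the function; real codes are in the files listed above.
sample = """<RetrieveValueSetResponse xmlns="urn:ihe:iti:svs:2008">
  <ValueSet id="1.2.3" displayName="Example value set">
    <ConceptList>
      <Concept code="EX-1" displayName="Example one" codeSystem="1.2.3.4"/>
      <Concept code="EX-2" displayName="Example two" codeSystem="1.2.3.4"/>
    </ConceptList>
  </ValueSet>
</RetrieveValueSetResponse>"""

for concept in read_concepts(sample):
    print(concept)
```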

(2) Load Connectathon Organization, Provider, Facility, and Services entries

In order to perform query testing with predictable results, Care Services Directories must be populated with the entries in the following files here in Google Drive under IHE Documents > Connectathon > test_data > ITI-profiles > CSD-test-data > CSD_Directory_Entries.

Some directories may support only a subset of these entry types:

- CSD-Organizations-Connectathon-<date>.xml

- CSD-Providers-Connectathon-<date>.xml

- CSD-Facilities-Connectathon-<date>.xml

- CSD-Services-Connectathon-<date>.xml

(3) Additional Organization, Provider, Facility, and Services entries

The Connectathon entries are limited in scope. We expect Directories to be populated with additional Organization, Provider, Facility & Service entries. We give no specific guidance on the number of entries, but we are looking for a more realistic database. Good entries offer better testing opportunities.

Evaluation

Create a short text file saying that you have finished loading your codes. Upload that text file into Gazelle Test Management as the results for this 'test'. That is your signal to us that you are ready for Connectathon testing.

HPD: Load Provider Test Data for Connectathon testing

Introduction

At the Connectathon, the HPD tests assume that a pre-defined set of Organizational and Individual provider information has been loaded on all of the Provider Information Directory actors under test.

Instructions

- Prior to the Connectathon, load this provider test data into your HPD Provider Information Directory:

- Connectathon tests depend on the presence of this test data. It is in the HPD_test_providers.xls file in Github here: https://github.com/IHE/connectathon-artifacts/tree/main/profile_test_data/ITI/HPD

- We expect that your Directory will also contain other provider information, beyond what is in this test set.

Evaluation

There are no result files to upload into Gazelle Test Management for this test. Preloading these prior to the Connectathon is intended to save you precious time during Connectathon week.

mCSD Load Test Data

Introduction

This is not an actual "test". Rather it is a task that ensures that the mCSD Care Services Selective Supplier is loaded with the Resources and value sets that we will use as part of Connectathon testing.

Instructions for Supplier Systems

The instructions below apply to mCSD Supplier systems. (The mCSD Consumer actor is included on this test so that it is aware of this mCSD test data, but it has no preload work to do. During Connectathon, the Consumer will be performing queries based on the content of these Resources.)

(1) Connectathon FHIR Resources

In order to perform query testing with predictable results, the Care Services Selective Supplier system must be populated with the entries from pre-defined FHIR Resources:

- Organization

- Location

- HealthcareService

- Practitioner

- PractitionerRole

**Some Suppliers may support only a subset of these.**

These resources are available in two places (the test data is the same in both places, so you only need to access/load one set):

- In Github here: https://github.com/IHE/connectathon-artifacts/tree/main/profile_test_data/ITI/mCSD

--or--

- On the HAPI FHIR Read/Write Server deployed for Connectathon. (Note that the link to this server will be published by the technical manager of your testing event.)
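
One possible way to load the pre-defined Resources, assuming your Supplier accepts FHIR transaction Bundles, is to wrap them in a single Bundle that creates or updates each Resource under its own id. The sketch below only builds the Bundle; the function name and the sample Resources are hypothetical, and posting the result to your server is left out.

```python
import json

def build_transaction_bundle(resources):
    """Wrap FHIR resources (dicts with resourceType and id) in a transaction Bundle."""
    entries = []
    for resource in resources:
        # PUT to ResourceType/id keeps the ids used by the test data.
        url = f"{resource['resourceType']}/{resource['id']}"
        entries.append({"resource": resource, "request": {"method": "PUT", "url": url}})
    return {"resourceType": "Bundle", "type": "transaction", "entry": entries}

# Hypothetical resources used only to exercise the function.
sample_resources = [
    {"resourceType": "Organization", "id": "example-org", "name": "Example Organization"},
    {"resourceType": "Location", "id": "example-loc", "name": "Example Location"},
]

bundle = build_transaction_bundle(sample_resources)
print(json.dumps(bundle, indent=2))
```

Using PUT with the original ids, rather than POST, keeps references between the Resources intact after loading.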

(2) Additional Resources

The pre-defined Connectathon test data are limited in scope. We expect Suppliers to be populated with additional Organization, Provider, Facility & Service Resources. We give no specific guidance on the number of Resources, but we are looking for a more realistic database. Good entries offer better testing opportunities.

(3) Value Sets for some codes:

The FHIR Resources for mCSD testing contain codes from some pre-defined ValueSet Resources.

These ValueSets are also found in Github and on the FHIR Read/Write Server at the links above.

| Code | FHIR ValueSet Resource id |
|---|---|
| Organization Type | IHE-CAT-mCSD-organizationTypeCode |
| Service Type | IHE-CAT-mCSD-serviceTypeCode |
| Facility Type | IHE-CAT-mCSD-facilityTypeCode |
| Facility Status | IHE-CAT-mCSD-facilityStatusCode |
| Provider Type | IHE-CAT-mCSD-providerTypeCode |
| Provider Status | IHE-CAT-mCSD-providerStatusCode |
| Credential Type | IHE-CAT-mCSD-credentialTypeCode |
| Specialty Type | IHE-CAT-mCSD-specializationCode |
| Language | languages |
| Provider Qualification | v2-2.7-0360 |

The mCSD Resources also contain codes from these FHIR ValueSets:

- Language Codes: http://hl7.org/fhir/ValueSet/languages (with coding.system of urn:ietf:bcp:47)

- Provider Qualification Codes: https://www.hl7.org/fhir/v2/0360/2.7/index.html

Evaluation

There are no result files to upload into Gazelle Test Management for this test. Preloading these prior to the Connectathon is intended to save you precious time during Connectathon week.

mXDE_Read_This_First

Introduction

This is not an actual "test". The Instructions section below describes the testing approach for the mXDE Profile. It provides context and preparation information prior to performing Connectathon tests with your test partners.

Instructions

Please read the following material prior to performing Connectathon tests for the mXDE Profile.

Overall Assumptions:

(1) There is a significant overlap between the mXDE and QEDm profiles. Each mXDE actor must be grouped with its QEDm actor counterpart. Thus, you should successfully complete tests for QEDm before attempting mXDE tests.

(2) The mXDE Profile refers to extracting data from documents but does not specify the document types. For purpose of Connectathon testing, we will provide and enforce use of specific patients and specific documents. We intend to use the same clinical test data for both QEDm and mXDE tests. See details about test patients and documents in the QEDm ReadThisFirst and DoThisFirst tests.

- For mXDE, we will extract data from documents for patient Chadwick Ross.

- If our test data (CDA documents, FHIR Resources) are not compatible with your system, please let us know as soon as possible. We would prefer to use realistic test data. It is not our intention to have you write extra software to satisfy our test data.

- Some of the coded data within the documents may use code systems that are not in your configuration. For example, they might be local to a region. You will be expected to configure your systems to use these codes just as you would at a customer site.

- We will require that the extraction process be automated on the mXDE Data Element Extractor system.

(3) The mXDE Data Element Extractor actor is grouped with an XDS Document Registry and Repository or an MHD Document Responder.

- When grouped with the XDS Document Registry and Repository, we will expect the Data Element Extractor to accept (CDA) documents that are submitted by XDS transactions to initiate the extraction process.

- When grouped with an MHD Document Responder, we will expect the Data Element Extractor to accept (CDA) documents submitted to an MHD Document Recipient to initiate the extraction process.

- In summary, you may not extract from your own internal documents. You must use the Connectathon test data provided.

(4) The tests reference several patients identified in QEDm: Read_This_First. These same patients are used for mXDE tests. The Data Element Extractor may choose to reference the patients on the Connectathon FHIR Read/Write Server or may import the Patient Resources and host them locally.

(5) The Provenance Resource is required to contain a reference to the device that performed the extraction. Because the device is under control of the Data Element Extractor, the Data Element Extractor will be required to host the appropriate Device Resource. You are welcome to use multiple devices as long as the appropriate Device resources exist. (See QEDm Vol 2, Sec 3.44.4.2.2.1).

(6) The QEDm Profile says the Provenance Resource created by the mXDE Data Element Extractor shall have a [1..1] entity element that points to the document from which the data was extracted.

- The Data Element Extractor that supports both the MHD and XDS grouping may create one Provenance Resource that references both forms of access (via MHD, via XDS) or may create separate Provenance resources to reference the individual documents.

(7) During the Connectathon, we want you to execute mXDE tests using the Gazelle Proxy. That will simplify the process of collecting transaction data for monitor review.

mXDE Data Element Extractor actor:

Overall mXDE test workflow:

(1) Create one or more Device Resources in your server (to be referenced by Provenance Resources you will create).

(2) Import the required test patients or configure your system to reference the test Patient Resources on the FHIR Read/Write Server.

(3) Repeat this loop:

- The Extractor will host the test document(s) on your system (you may have received them via MHD or XDS).

- Your system will extract the relevant data and create FHIR Resources (Observation, and/or Medication, and/or ...) on your server.

- Your system will create at least one Provenance Resource for each of the FHIR Resources created.

- The Provenance Resource will reference at least one entity (MHD or XDS) and may reference both entities if supported.

- A software tool or Data Element Provenance Consumer will send FHIR search requests to your system. A set of queries that might be sent is included below. [Lynn: refer to the relevant *Search* test]
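
To make the loop above more concrete, the sketch below shows one way a Provenance Resource could be assembled for a single extracted Resource. It uses only elements that FHIR R4 requires on Provenance, plus entity; the entity role value "source", the reference formats, and the function name are assumptions for illustration, so check them against QEDm Vol 2, Sec 3.44.4.2.2.1.

```python
import json

def build_provenance(target_ref, device_ref, document_refs, recorded):
    """Build a minimal Provenance resource linking an extracted resource to its source document(s)."""
    if not document_refs:
        raise ValueError("at least one source document reference is required")
    return {
        "resourceType": "Provenance",
        # The extracted resource this Provenance describes.
        "target": [{"reference": target_ref}],
        "recorded": recorded,
        # The Device resource hosted by the Data Element Extractor.
        "agent": [{"who": {"reference": device_ref}}],
        # One entity per form of access to the source document (MHD and/or XDS).
        # The role value "source" is an assumption of this sketch.
        "entity": [{"role": "source", "what": {"reference": ref}} for ref in document_refs],
    }

# Hypothetical references used only to exercise the function.
provenance = build_provenance(
    target_ref="Observation/example-obs",
    device_ref="Device/example-extractor",
    document_refs=["DocumentReference/example-doc"],
    recorded="2024-01-01T12:00:00Z",
)
print(json.dumps(provenance, indent=2))
```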

mXDE Data Element Provenance Consumer actor:

Overall mXDE test workflow:

(1) Configure your system with the endpoint of the Data Element Extractor partner.

(2) Repeat this loop for each data element supported (Observation, Medication, ...); some of the items might occur in a different order based on your software implementation:

- Send a search request of the form: GET [base]/[Resource-type]?_revinclude=Provenance:target&criteria

- Retrieve the source document or documents referenced in the Provenance Resource(s).

- IHE profiles usually do not define how a consumer system makes use of clinical data. The mXDE Profile is no different. We will expect you to demonstrate some reasonable action with the FHIR resources that have been retrieved and the source document(s).
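
The search step in this loop can be sketched as follows: build the request URL with the _revinclude parameter, then separate the matched Resources from the Provenance Resources in the returned Bundle. The base URL, search criteria, function names and sample Bundle are hypothetical; the HTTP call itself is left out.

```python
from urllib.parse import urlencode

def build_search_url(base, resource_type, criteria):
    """Build GET [base]/[Resource-type]?_revinclude=Provenance:target&criteria."""
    params = [("_revinclude", "Provenance:target")] + list(criteria.items())
    # Keep ':', '/' and '|' readable; they are common in FHIR search values.
    return f"{base.rstrip('/')}/{resource_type}?{urlencode(params, safe=':/|')}"

def split_bundle(bundle):
    """Return (matched resources, Provenance resources) from a searchset Bundle."""
    matches, provenances = [], []
    for entry in bundle.get("entry", []):
        resource = entry["resource"]
        if resource["resourceType"] == "Provenance":
            provenances.append(resource)
        else:
            matches.append(resource)
    return matches, provenances

url = build_search_url("http://example.org/fhir", "Observation", {"patient": "Patient/123"})
print(url)

# Hypothetical response used only to exercise split_bundle.
sample_bundle = {
    "resourceType": "Bundle",
    "type": "searchset",
    "entry": [
        {"resource": {"resourceType": "Observation", "id": "obs1"}},
        {"resource": {"resourceType": "Provenance", "id": "prov1"}},
    ],
}
matches, provenances = split_bundle(sample_bundle)
print(len(matches), len(provenances))
```

The document references needed for the retrieve step are then found in the entity elements of each Provenance Resource.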

Evaluation

There are no result files to upload into Gazelle Test Management for this test. Understanding the testing approach in advance is intended to make testing during Connectathon week more efficient.

PMIR_Connectathon_Test_Patients

Overview:

These instructions apply to the Patient Identity Registry actor in the Patient Master Identity Registry (PMIR) Profile. In this test, a PMIR Registry will load its database with Patient Resources formatted for [ITI-93] Mobile Patient Identity Feed, to support subscription tests that will occur later, during the Connectathon.

Background Information:

Read this section for background information about test patients used for PMIR testing at the Connectathon. Otherwise, for instructions for loading test patients, skip to the Instructions below.

In a normal deployment, a product is operating in an environment with a policy for patient identity creation and sharing that remains stable.

However, at the Connectathon, we test multiple profiles (for patient management: PIX, PDQ, PMIR... for document sharing: XDS, MHD...). Thus, the Connectathon provides special challenges when requirements for actors differ across IHE Profiles. Particularly relevant in PMIR and PIXm is the behavior of the server actor (the PMIR Patient Identity Registry & the PIXm Cross-Reference Manager).

A PIXm Patient Identifier Cross-Reference Manager:

Source of Patients in the PIXm Profile: The PIX Manager has many patient records, and a single patient (person) might have several records on the PIX Manager server that are cross-referenced because they apply to the same patient. The Patient Identity Feed [ITI-104] transaction in PIXm was introduced in PIXm Rev 3.0.2 in March 2022. The PIX Manager may also have other sources of patient information (e.g. an HL7v2 or v3 Feed).

At the Connectathon, we ask PIX Managers to preload “Connectathon Patient Demographics” that are provided via the Gazelle Patient Manager tool (in HL7v2, v3, or FHIR Patient Resource format). These Connectathon Patient Demographics contain four Patient records for each ‘patient’, each with identical demographics (name, address, DOB), but with a different Patient.identifier (with system values representing the IHERED, IHEGREEN, IHEBLUE, and IHEFACILITY assigning authority values). We expect that the PIX Manager will cross-reference these multiple records for a single patient since the demographics are the same.

QUERY: When a PIXm Consumer sends a PIXm Query [ITI-83] to the PIXm Manager with a sourceIdentifier representing the assigning authority and patient ID (e.g. urn:oid:1.3.6.1.4.1.21367.3000.1.6|IHEFACILITY-998), the PIXm Manager would respond with [0..*] targetId(s) which contain a Reference to a Patient Resource (one reference for each matching Patient Resource on the server).
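As a rough illustration of the query described above, the following Python sketch builds the request URL for a PIXm Query [ITI-83]. The server base URL and the helper name `build_pixm_query_url` are hypothetical. The `$ihe-pix` operation and the `sourceIdentifier` parameter reflect our reading of ITI-83, so check them against the PIXm revision you are testing.

```python
from urllib.parse import urlencode


def build_pixm_query_url(base_url, system, value):
    """Build a PIXm Query [ITI-83] URL (sketch only; nothing is sent)."""
    # sourceIdentifier is a FHIR token: "<assigning authority>|<patient ID>".
    token = f"{system}|{value}"
    # urlencode percent-encodes ':' and '|' so the token stays one parameter.
    query = urlencode({"sourceIdentifier": token})
    return f"{base_url.rstrip('/')}/Patient/$ihe-pix?{query}"


url = build_pixm_query_url(
    "https://example.org/fhir",  # hypothetical server base URL
    "urn:oid:1.3.6.1.4.1.21367.3000.1.6",
    "IHEFACILITY-998",
)
print(url)
```

The same helper would serve for a query in the GOLD domain by passing a different assigning authority and patient ID.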

At the Connectathon, if a PIXm Consumer queries by a Patient ID in the IHEFACILITY domain (as in the example above) and there is a match, the PIXm Manager would return a response with three matches, one each for RED, GREEN, and BLUE.

A PMIR Patient Identity Registry:

Source of Patients in the PMIR Profile: The PMIR Profile is intended for use in an environment where each patient has a single “Golden Patient record”. In PMIR, a patient has a single “Patient Master Identity” (a.k.a. Golden Patient record) that is comprised of identifying information, such as business identifiers, name, phone, gender, birth date, address, marital status, photo, contacts, preference for language, and links to other patient identities (e.g. a mother’s identity linked to a newborn).

The PMIR Patient Identity Source actor sends the Mobile Patient Identity Feed [ITI-93] transaction to the PMIR Patient Identity Registry to create, update, merge, and delete Patient Resources.

The PMIR Registry persists one "Patient identity" per patient. The PMIR Registry relies on the Patient Identity Source actor as the source of truth about new patient records (FHIR Patient Resources), and about updates/merges/deletes of existing patient records (i.e. the Registry does whatever the Source asks). The PMIR Registry does not have algorithms to 'smartly' cross-reference multiple/separate records for a patient.

In the FHIR Patient Resource in PMIR, there are two attributes that hold identifiers for a patient:

- Patient.id - in response to a Mobile Patient Identity Feed [ITI-93] from a Source requesting to 'create' a new patient, the Patient Identity Registry assigns the value of Patient.id in the new Patient Resource. This value is *the* unique id for the patient's 'golden identity' in the domain.

- Patient.identifier - the Patient Resource may contain [0..*] other identifiers for the patient, e.g. Patient ID(s) with assigning authority, a driver's license number, Social Security Number, etc. In the pre-defined Patient Resources used for some PMIR tests, we have defined a single assigning authority value (urn:oid:1.3.6.1.4.1.21367.13.20.4000, aka the "IHEGOLD" domain). This distinguishes these patients from patients in the red/green/blue domains used to test other profiles.
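To make the distinction between the two attributes concrete, here is a minimal Python sketch. The Patient Resource below is invented for illustration: the `id` value and the driver's license system are made up, and the helper `identifiers_in_domain` is hypothetical. Only the IHEGOLD assigning authority OID comes from the text above.

```python
GOLD_SYSTEM = "urn:oid:1.3.6.1.4.1.21367.13.20.4000"  # IHEGOLD assigning authority

# Hypothetical Patient Resource as a Registry might hold it after a 'create'.
patient = {
    "resourceType": "Patient",
    "id": "registry-assigned-123",  # assigned by the Registry (made-up value)
    "identifier": [
        {"system": GOLD_SYSTEM, "value": "IHEGOLD-555"},
        {"system": "urn:example:drivers-license", "value": "D1234567"},  # made up
    ],
}


def identifiers_in_domain(resource, system):
    """Return the identifier values issued by one assigning authority."""
    return [
        ident["value"]
        for ident in resource.get("identifier", [])
        if ident.get("system") == system
    ]


gold_ids = identifiers_in_domain(patient, GOLD_SYSTEM)
print(patient["id"], gold_ids)
```

Filtering on the `system` value is one simple way a test system could tell GOLD patients apart from patients in the red/green/blue domains.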

At the Connectathon, because some systems support PIX/PIXv3/PIXm and PMIR, we provide separate Patients (in [ITI-93] format) for PMIR testing with identifiers in a single assigning authority - urn:oid:1.3.6.1.4.1.21367.13.20.4000 (aka IHEGOLD). PMIR Registry systems will preload these patients, and they are only used for PMIR tests. These patients have different demographics than the traditional red/green/blue Connectathon patients used for PIX, PDQ, XDS, and XCA.

QUERY: When a PMIR Patient Identifier Cross-Reference Consumer sends a PIXm Query [ITI-83] to the PMIR Registry with a sourceIdentifier representing the assigning authority and patient ID (e.g. urn:oid:1.3.6.1.4.1.21367.13.20.4000|IHEGOLD-555), if there is a match, the PMIR Registry would return a response with the one matching Patient Resource (the 'Golden' patient record).

At the Connectathon, if a Patient Identifier Cross-Reference Consumer sends a PIXm Query by a Patient ID in the GOLD domain and there is a match, the PMIR Registry would return a response with a reference to one Patient Resource.

In conclusion, using the RED/GREEN/BLUE Patients for PIX* testing, and the GOLD Patients for PMIR testing enables us to separate expected results that differ depending on whether a server is a PIX* Patient Identifier Cross-reference Manager or a PMIR Patient Identity Registry in a given test. We have managed testing expectations by using patients in different domains for testing the two profiles, but we don't tell you how you manage this in your product if you support both PMIR and PIX.

Instructions:

In this test, the PMIR Patient Identity Registry loads a set of FHIR Patient Resources used in PMIR peer-to-peer subscription tests. We use a set of FHIR Patient Resources for PMIR testing that are different than the Connectathon patients for testing the PIX* & PDQ* Profiles.

The patient test data is a Bundle formatted according to the requirements for a Mobile Patient Identity Feed [ITI-93] transaction.

The PMIR Patient Identity Registry should follow these steps to load the patient test data:

- Find the test patients in Github here: https://github.com/IHE/connectathon-artifacts/tree/main/profile_test_data/ITI/PMIR

- Download this file: 001_PMIR_Bundle_POST-AlfaBravoCharlie-GOLD.xml (we only provide the data in xml format). That .xml file is a Bundle formatted according to the requirements for ITI-93.

- POST that file to your PMIR Registry so that you are hosting the three Patient Resources within that file.

- Note that there are other files in that directory that you can access now, but do not POST them to your Registry until instructed to do so in the Subscription test during the Connectathon. It is important to wait because posting them during the Connectathon will be a trigger for a subscription.
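The POST step above could be sketched in Python as follows. This is only an illustration: the base URL is a placeholder, the helper `build_feed_request` is hypothetical, and the `$process-message` endpoint and `application/fhir+xml` content type reflect our understanding of how an [ITI-93] message Bundle is submitted. Your Registry may offer its own loading mechanism instead.

```python
import urllib.request


def build_feed_request(base_url, bundle_xml):
    """Prepare (but do not send) an HTTP POST carrying an ITI-93 Bundle."""
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/$process-message",
        data=bundle_xml,
        method="POST",
        headers={"Content-Type": "application/fhir+xml"},
    )


# Stand-in bytes; in practice, read the downloaded file instead, e.g.
# open("001_PMIR_Bundle_POST-AlfaBravoCharlie-GOLD.xml", "rb").read()
placeholder_bundle = b"<Bundle xmlns='http://hl7.org/fhir'/>"

request = build_feed_request("https://example.org/fhir", placeholder_bundle)
print(request.get_method(), request.full_url)
# To actually send it: urllib.request.urlopen(request)
```

Building the request separately from sending it makes it easy to check the URL and headers before anything reaches the Registry.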

Evaluation:

There is no log file associated with this 'test', so you do not need to upload results into Gazelle Test Management for this test.


PLT_Preload_Codes

Introduction

To prepare for testing the ITI Patient Location Tracking (PLT) Profile, the PLT Supplier, Manager and Consumer actors must use a common set of HL7 codes.

We ask these actors to load codes relevant to their system in advance of the Connectathon.

Instructions

The codes you need are identified in the peer-to-peer test that you will perform at the Connectathon.

1. In Gazelle Test Management, find the test "PLT_TRACKING_WORKFLOW" on your main Connectathon page.

2. Read the entire test to understand the test scenario.

3. Load the codes for PV1-11 Temporary Patient Location. These are in a table at the bottom of the Test Description section.

Note: These codes are a subset of the HL7 codes used during Connectathon. If you already performed pre-Connectathon test "Preload_Codes_for_HL7_and_DICOM", then you already have these codes.
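For orientation, the sketch below shows where PV1-11 sits in an HL7v2 PV1 segment. The segment content is invented, and "EXAMPLE-LOC" is not one of the Connectathon codes; the real values are in the table in the test description. The helper `temporary_location` is hypothetical and does no component or escape handling.

```python
def temporary_location(pv1_segment):
    """Return the raw PV1-11 (Temporary Location) field of a PV1 segment."""
    fields = pv1_segment.split("|")
    if fields[0] != "PV1":
        raise ValueError("not a PV1 segment")
    # fields[0] is the segment name, so PV1-n sits at index n.
    return fields[11] if len(fields) > 11 else ""


# Made-up segment: PV1-1..PV1-3 filled, PV1-4..PV1-10 empty, PV1-11 set.
segment = "|".join(["PV1", "1", "I", "WARD1^101^A"] + [""] * 7 + ["EXAMPLE-LOC"])
print(temporary_location(segment))
```

A quick check like this against a sample message can confirm that your system places the loaded codes in the field your test partners expect.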

Evaluation

There is no evaluation for this 'test'. If you do not load the codes you need on your test system prior to the Connectathon, you may find yourself wasting valuable time on the first day of Connectathon syncing your codes with those of your test partners.

Preload PDO: Load Patients for Pediatric Demographics option

Introduction

These instructions apply to actors that support the Pediatric Demographics option. This test asks actors to load their database with patients for "twin" use cases.

Instructions

Actors that support the Pediatric Demographics Option must run this 'test' to ensure that test patients with proper pediatric demographics are in their systems in order to run subsequent tests on the Pediatric Demographics option.

- PIX/PIXv3 Patient Identity Source systems - load these patients into your database. At the Connectathon, you will perform peer-to-peer tests in which you will be asked to send them to a PIX Manager.

- PDQ/PDQv3/PDQm Patient Demographics Supplier systems - load these patients into your database. At the Connectathon, you will perform peer-to-peer tests in which you will be asked to respond to queries for these patients.

The Pediatric Demographics test patients are here: http://gazelle.ihe.net/files/Connectathon_TwinUseCases.xls

We ask you to manually load these patients. Unfortunately, we cannot use the Gazelle Patient Manager tool to load these patients because the 'special' pediatric fields are not supported by the tool.

Evaluation

There is no log file associated with this 'test', so you do not need to upload results into Gazelle Test Management for this test.


RFD_Read_This_First

This “Read This First” test helps to prepare you to test RFD-based profiles at an IHE Connectathon.

A Pre-Connectathon Task for Form Managers & Form Processors

Documenting RFD_Form_Identifiers: This is a documentation task. We request all Form Managers and Form Processors to help their Form Filler test partners by documenting the Form Identifiers (formID) that the Form Fillers will use during Connectathon testing. Follow the instructions in preparatory test RFD_formIDs.

Overview of RFD Connectathon Testing

RFD and its 'sister' profiles:

The number of IHE profiles based on RFD has grown over the years. These 'sister' profiles re-use actors (Form Filler, Receiver, Manager, Processor, Archiver) and transactions (ITI-34, -35, -36) from the base RFD profile.

Starting at 2016 Connectathons, to reduce redundant testing, we have removed peer-to-peer tests for RFD only. If you successfully complete testing for an actor in a 'sister' profile, you will automatically get a 'Pass' for the same actor in the baseline RFD profile. For example, if you "Pass" as a Form Filler in CRD, you will get a "Pass" for a Form Filler in RFD for 'free' (no additional tests).

Similar tests across profiles:

Baseline Triangle and Integrated tests: These RFD tests exercise the basic RFD functions Retrieve Form and Submit Form.

"Triangle" and "Integrated" refer to the combinations of actors in the tests. A Triangle test uses a Form Filler, Form Manager and Form Receiver (triangle). An Integrated test refers to the Form Processor that is an integrated system (supports both ITI-34 and ITI-35); the Integrated test uses a Form Filler and a Form Processor.

CDA Document Tests: We have tried to be more thorough in our definitions of tests for CDA documents; we still have some work to do. There are “Create_Document” tests that ask actors that create CDA documents to produce a document and submit that document for scrutiny/validation by a monitor. There are test sequences that need those documents for pre-population or as an end product; you cannot run those tests until you have successfully completed the “Create_Document” test. We have modified the test instructions for the sequence tests that use CDA documents to require the creator to document which “Create_Document” test was used. We do not want to run the sequence tests before we know we have good CDA documents.

Archiving Forms: We have a single test -- "RFD-based_Profiles_Archive_Form" to test Form Archivers and Form Fillers that support the 'archive' option. There are separate tests for archiving source documents.

Testing of options: IHE does not report Connectathon test results for Options in IHE profiles. We readily admit that the tests that cover options will vary by domain and integration profile. If you read the tests in domains other than QRPH (or even in QRPH), you may find profiles that have no tests for named options. We continue to try to enhance tests for named options and other combinations of parameters found in the QRPH profiles.