Quality Manual

This is the Quality Manual and System for KEREVAL Health Lab, in the IHE context.

The general framework is given in "Quality Manual for KEREVAL Health Lab", available on request from the Lab Manager or the Quality Manager.

Scope

Publishing rules

Organization of the laboratory

Applicable processes

- Test tools Development and Test Process

- Test Session Process

- Staff Qualification

- Project Management

- Quality management

Annexes

Detailed scope

Ref : KER3-NOT-HEALTHLAB-SCOPE-20131107-EPU

Scope of the accreditation

Standards

- ANSI/HL7 CDA R2-2005 (ISO/HL7 27932:2009)

- ANSI/HL7 V2.x

- OASIS

- CEN/ISO EN 13606

- ISO-TS 15000

IHE Profiles

- XDS.b (Cross-Enterprise Document Sharing)

- http://ihe.net/uploadedFiles/Documents/ITI/IHE_ITI_TF_Vol1.pdf, Section 10 - Cross-Enterprise Document Sharing (XDS.b)

- XDS-MS (Cross-Enterprise Document Sharing - Medical Summaries)

- http://ihe.net/uploadedFiles/Documents/PCC/IHE_PCC_TF_Vol1.pdf, Section 3 - Cross-Enterprise Sharing of Medical Summaries (XDS-MS)

- XDS-SD (Cross-Enterprise Sharing of Scanned Document)

- http://ihe.net/uploadedFiles/Documents/ITI/IHE_ITI_TF_Vol1.pdf, Section 20 - Cross-Enterprise Sharing of Scanned Document content integration profile

- ATNA (Audit Trail and Node Authentication)

- http://ihe.net/uploadedFiles/Documents/ITI/IHE_ITI_TF_Vol1.pdf, Section 9 - Audit Trail and Node Authentication (ATNA)

- CT (Consistent Time)

- http://ihe.net/uploadedFiles/Documents/ITI/IHE_ITI_TF_Vol1.pdf, Section 7 - Consistent Time (CT)

- PIX (Patient Identity Cross-Referencing)

- http://ihe.net/uploadedFiles/Documents/ITI/IHE_ITI_TF_Vol1.pdf, Section 5 - Patient Identity Cross-Referencing (PIX)

- PDQ (Patient Demographics Query)

- http://ihe.net/uploadedFiles/Documents/ITI/IHE_ITI_TF_Vol1.pdf, Section 8 - Patient Demographics Query (PDQ)

Normative methods

eHealth Conformance Interoperability Assessment Scheme

- HITCH (Healthcare Interoperability Testing and Conformance Harmonisation)

Effective date : 11/07/2013

Approved by Eric POISEAU

Publishing workflow

- Edit page
  - The contributor must log in to access the "Edit" function of the pages.
  - Once editing is complete, the author changes the status of the page from "Draft" to "To be reviewed".
  - When modifying a page that is already approved, the contributor may keep the "approved" status if the modification is minor (e.g. formatting), but he/she must make the approver aware of the modification.
- Validation
  - The author asks for validation from the Health Lab Manager or from the Quality Manager, depending on the type of subject.
  - The manager can then change the status to "to be reviewed", "rejected", "approved" or "deprecated".
- Disseminate the publication
  - Once a publication is made, the contributor must send an email to ihe@kereval.com to make the team aware that new information is available. Readers can also subscribe to the Drupal site through RSS feeds.
- Traceability
  - Traceability of the changes made to each page is visible only to the KEREVAL team and the administrator.

Changes are tracked through the "Track" module, while status changes are followed up through the "Workflow" module.
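The status rules of the publishing workflow can be sketched as a small permission check. This is only an illustration: the role names `author` and `manager`, the function `can_change`, and the assumption that a manager may set one of the four listed statuses from any current status are not taken from the Drupal "Workflow" module configuration.

```python
from enum import Enum


class Status(Enum):
    DRAFT = "Draft"
    TO_BE_REVIEWED = "To be reviewed"
    REJECTED = "Rejected"
    APPROVED = "Approved"
    DEPRECATED = "Deprecated"


# Statuses a manager may set, as listed in the "Validation" step.
MANAGER_TARGETS = {
    Status.TO_BE_REVIEWED,
    Status.REJECTED,
    Status.APPROVED,
    Status.DEPRECATED,
}


def can_change(role: str, current: Status, target: Status) -> bool:
    """Return True if `role` may move a page from `current` to `target`.

    Assumption: role names are hypothetical labels for this sketch.
    """
    if role == "author":
        # The author only submits a finished draft for review.
        return current is Status.DRAFT and target is Status.TO_BE_REVIEWED
    if role == "manager":
        # Assumption: the manager may set any listed status, from any state.
        return target in MANAGER_TARGETS and target is not current
    return False


print(can_change("author", Status.DRAFT, Status.TO_BE_REVIEWED))
print(can_change("author", Status.DRAFT, Status.APPROVED))
```

The minor-modification exception (keeping the "approved" status after a formatting change) is deliberately left out, since it relies on human judgment rather than on a status transition.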

Actors and Roles


General roles

| Role | Definition |
|---|---|
| Top Level Management | Top Level Management coordinates the different activities. It gets reports from the QA Manager, the Lab and Test Session Managers, and the Auditors. |
| Lab Manager | The KEREVAL Health Lab Manager manages the activities of the KEREVAL e-Health laboratory and maintains the technical expertise of the laboratory. A substitute is identified for this role. |
| QA Committee | A committee whose role is to ensure the quality of the testing process. It discusses the needs and decides what needs to be done in terms of quality. |
| QA Manager | Manages the QA. Gets input from the QA Committee and reports to Top Level Management. |
| Auditors | The role of independent auditors is to verify that the QMS processes and testing references are correctly used and/or respected. |

Test Session

| Role | Definition |
|---|---|
| Testing Session Manager | Manages the testing session. |
| Monitors | Verify and validate the tests performed by the Systems in accordance with the test cases described in the Gazelle Management tool. Monitors can be either external or internal resources, depending on the type of test session. In any case, they must be qualified. |
| SUT Operators | SUT Operators execute on their SUT the test steps required by the test. SUT Operators are by definition external resources, belonging to Health companies. |

Test Tools Development

| Role | Definition |
|---|---|
| Test Tools Development Manager | Manages the development team. |
| Test Tools Development Team | They should follow the processes set up for the development of the test tools and should develop the test tools taking into account the state of the art in this field. They are also Testers. |
| Testers | Technically skilled professionals who are involved in the testing of KEREVAL Health Lab test tools. Depending on the level of testing, testers can be developers, dedicated testers within KEREVAL, or SUT operators for Beta testing (testing by vendors or any SUT operators who use the system at their own locations and provide feedback to the Test Tool Developers). |

Laboratory chart

Organization of KEREVAL Health Lab

Test Tools Development and Test Processes

Test Tools interactions

Test Tools Lifecycle

Development & Validation

Objectives

- Manage evolution and maintenance of KEREVAL HEALTH Lab platform

Validation tools

- XML Based Validators development and validation process

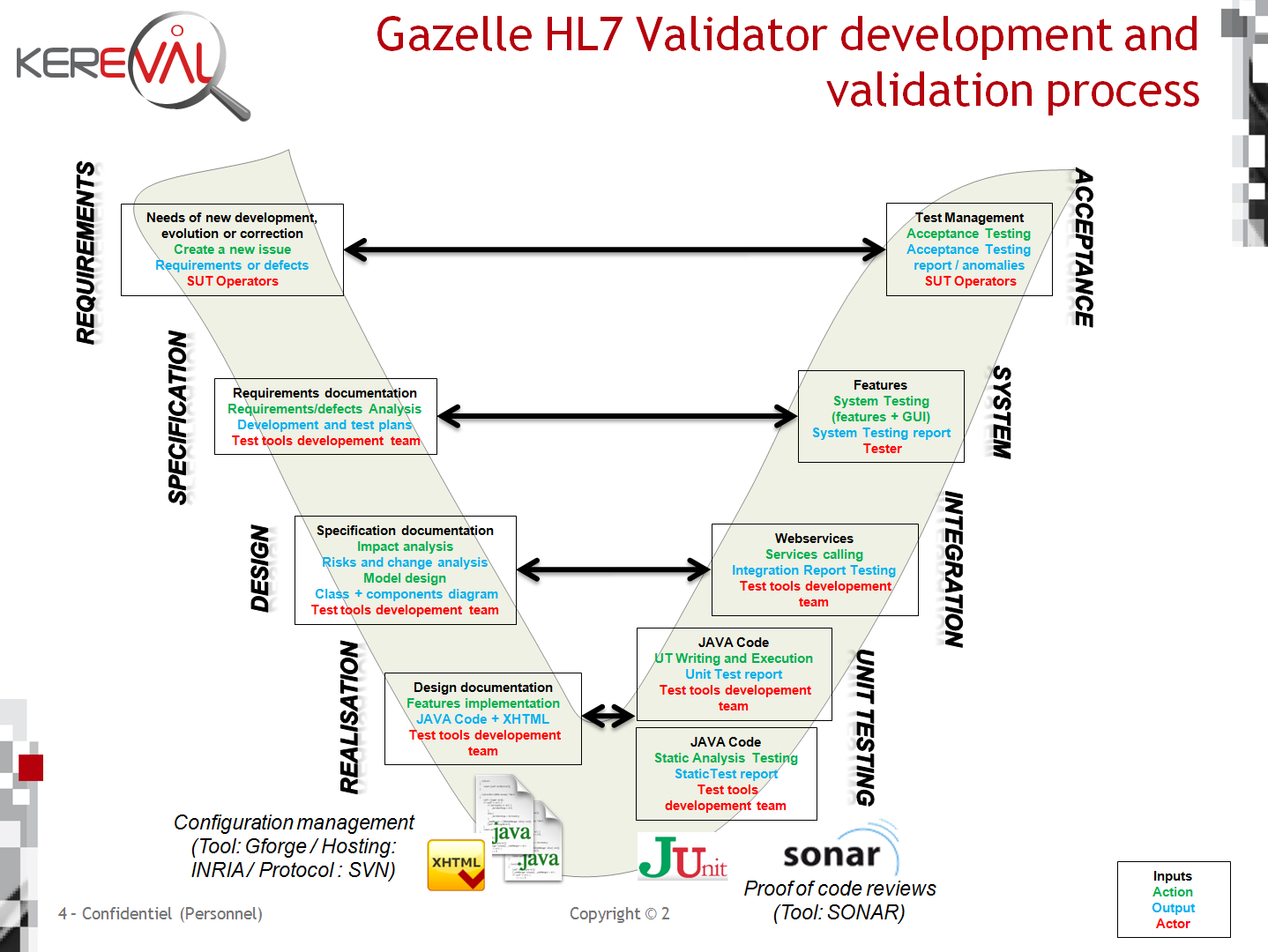

- Gazelle HL7 Validator development and validation process

Simulation tools

Test management tool

Support Tools

Tool release management

Gap Analysis

A watch is kept on the evolution of our references.

When a reference evolves, impact analysis is performed by the Lab Manager to determine possible changes.

Following this analysis, one or more tickets are created in JIRA to take these changes into account.

These changes are then included in the traditional development process of testing tools.

Test Strategy applied to test tools

- Introduction

The purpose of this section is to present the test strategy and approach of the KEREVAL Health Lab.

This section takes into account the comprehensive approach to project management in the IHE group and applies to any project in the IHE portfolio.

Within an organization, a test strategy describes all the verification and validation activities of a project, and the methods for conducting them, in order to ensure:

- That the objectives addressed by the project are met

- The absence of critical regressions in the system

- Compliance with the specifications

- Compliance with the quality requirements

- Robustness of the system

It defines the test phases, tasks and deliverables, and the roles and responsibilities of the different stakeholders, and it manages the coordination of actors and the sequence of tasks.

As ISTQB points out, it is not possible to test everything; the test strategy is therefore based on an analysis of risks and priorities in order to focus the testing effort.

01 - Test Tools Lifecycle

02 - Development & Validation

01-XML Based Validators Development and Validation Process

01-Requirements

Requirements Process

Objectives

- Identify the needs for new validators coming from standardization bodies

Pre-requisites

- N/A

Inputs

- Needs of the new validator

- Requirements concerned by the validator

Actions

- Write the needs in a specification document, or in accessible documentation, to be analysed by the development team

Outputs

- Specification Documents

- Normative XSD

- Formal requirements

02-Specification

Specification Process

Objectives

- Identify and analyse impact from requirements process outputs

- Identify functionality and release

- Identify tool impact

Pre-requisites

- Requirements are identified by standardization bodies

Inputs

- Specification Documents

- Normative XSD

Actions

- Analyse the specification documents

- Analyse the normative XSD

- Extract the requirements from the documentation

Outputs

- Document in XML format listing the requirements, used to link the documentation with the tests

03-Design

Design Process

Objectives

- Design from the received specifications

Pre-requisites

- Specification Documents are delivered and analysed

Inputs

- XML format document produced by the Specification Process

- Formalized requirements

Actions

- XSD to UML processing
  - Convert the normative XSD document into a UML model
- OCL injection
  - Include constraints in the model to ensure the validation

Outputs

- UML Model

- UML Model + OCL

04-Realisation

Realisation Process

Objectives

- Develop a new validator module, or update an existing one, in compliance with the normative documents

Pre-requisites

- Specification Documents are translated into a UML Model with OCL derived from the normative documents

Inputs

- UML Model with OCL produced by the Design Process

Actions

- Generate JAVA code from the UML Model

- Create a unit test for each function/constraint

- Generate the documentation of the module

Outputs

- JAVA code

- Unit test JAVA code

- JAVA code documentation of module
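The idea of pairing each constraint with its own unit test can be illustrated with a minimal sketch. The real validators are generated as JAVA code from a UML model with OCL; the Python below is only a stand-in, and the constraint itself (every `entry` element carries a non-empty `id` attribute), the element names and the sample documents are hypothetical.

```python
import xml.etree.ElementTree as ET


def check_entry_ids(root: ET.Element) -> list[str]:
    """Hypothetical constraint: every <entry> has a non-empty 'id' attribute.

    Returns one error message per violating element (empty list if valid).
    """
    errors = []
    for index, entry in enumerate(root.iter("entry")):
        if not entry.get("id"):
            errors.append(f"entry[{index}]: missing or empty 'id' attribute")
    return errors


# One passing and one failing sample per constraint, as a unit test would use.
VALID_SAMPLE = "<document><entry id='e1'/><entry id='e2'/></document>"
INVALID_SAMPLE = "<document><entry id='e1'/><entry/></document>"


def test_check_entry_ids() -> None:
    assert check_entry_ids(ET.fromstring(VALID_SAMPLE)) == []
    assert check_entry_ids(ET.fromstring(INVALID_SAMPLE)) == [
        "entry[1]: missing or empty 'id' attribute"
    ]


test_check_entry_ids()
```

Keeping one positive and one negative sample per constraint makes it easy to see which rule a failing test refers to.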

05-Test

Test Process

Objectives

- Verify that the module respects all rules and specifications

Pre-requisites

- JAVA code is implemented

Inputs

- JAVA Code

- Validator webservice

- Sample documents

Actions

- Unit Test
  - Static analysis
  - UT generation and verification
- Integration Test
  - Calls to the service modules
- System Test
  - System testing against the Specification documents
- Acceptance Test
  - Beta testing by vendors according to their needs

Outputs

- Test report

02-Gazelle HL7 Validator development and validation process

01-Requirements

Requirements Process

Objectives

- Formalize the need for a new development, evolution or correction of the HL7 validator.

- Describe the expected features in the form of use cases or defects

Pre-requisites

- N/A

Inputs

- Need for a new feature, a change or a correction

Actions

- Write the needs in an issue

Outputs

- Requirements / Defects

02-Specification

Specification Process

Objectives

- Identify and analyse impact from requirements process outputs

- Identify features and releases

- Identify tool impacts

Pre-requisites

- Requirements are identified and created

Inputs

- Issue which contains the need

Actions

- Analyse the issue to identify existing tools that can be used and, failing that, the new developments to be performed

- Create a list of features to be offered by HL7 Validator

- Specify the graphical user interface

Outputs

- Gap analysis (lists the support tools which are required by Test Management but missing)

- Features list

- Mock-up of GUI

- Development and Test plans

03-Design

Design Process

Objectives

- Design and create specification

Pre-requisites

- A formal expression of the needs is available

Inputs

- Existing specification and design of module

- Existing specification and design of tool

- Existing platform architecture and design

- Change requests (issue)

- Management of documentation process

Actions

- Design the module hierarchy: whether the HL7 validator will inherit from existing Gazelle modules, what the dependencies on other modules/third-party libraries are, and which new modules have to be developed and should be kept as independent as possible for possible reuse.

- Design the HL7 validator model: which entities are needed and how they are linked together.

- Analyse the change requests and update their state

- Analyse the impact on modules other than those specified in the change request

Outputs

- Interactions diagram

- Class and components diagrams

- Specification and design of module

- Specification and design of tool

- HL7 validator architecture and design

- Document index up to date

04-Realisation

Realisation Process

Objectives

- Develop new HL7 validator features in compliance with the normative documents

Pre-requisites

- Specification documents are validated

Inputs

- Tool specifications or module specifications

- Tool design document or module design document

- Coding rules

- Development repository

Actions

- Create new features or modules, applying the KEREVAL development process available on SMQ

Outputs

- JAVA code + XHTML

- JAVA code documentation of module

- New branch on development repository

05-Test

Test Process

Objectives

- Verify that the new features respect all rules and specifications

Pre-requisites

- JAVA and XHTML code is compiled

Inputs

- JAVA/XHTML Code

- Branch of development on IHE SVN

- Validation strategy

- Validation objectives

Actions

- Unit Test
  - Static analysis
  - Unit testing on critical methods
- Integration Test
  - Verify service calls (web services)
- System Test
  - System testing against the Specification documents (features & GUI)
- Acceptance Test
  - Beta testing by vendors according to their needs

Outputs

- Test report covering all test levels

03-Simulators development and validation process

01-Requirements

Requirements Process

Objectives

- Identify new integration profiles/actors, defined by standardization bodies, to be emulated

Pre-requisites

- N/A

Inputs

- Needs of a new tool for testing an integration profile/actor implementation

- Requirements from the technical framework concerning the chosen integration profile

Actions

- Write the needs in a specification document, or in accessible documentation, to be analysed by the development team

Outputs

- Technical Framework

- Formal requirements

02-Specification

Specification Process

Objectives

- Identify and analyse impact from requirements process outputs

- Identify features and releases

- Identify tool impacts

Pre-requisites

- Requirements are identified by standardization bodies

Inputs

- Requirements Documents

- Technical Framework

Actions

- Analyse the requirements documentation/technical framework to identify existing tools that can be used and, failing that, the new developments to be performed

- Create a list of features to be offered by the new simulator

- Specify the graphical user interface

Outputs

- Gap analysis (lists the support tools which are required by the new simulator but missing)

- Features list

- Mock-up of GUI

03-Design

Design Process

Objectives

- Design from the received specifications

Pre-requisites

- Specification Documents are delivered and analysed

Inputs

- Formalized requirements / technical framework

Actions

- Design how the new simulator will be integrated within the Gazelle platform (as a new application or within an existing application) and how it will communicate with the other tools of the platform

- Design the module hierarchy: whether the new simulator will inherit from existing Gazelle modules, what the dependencies on other modules/third-party libraries are, and which new modules have to be developed and should be kept as independent as possible for possible reuse.

- Design the simulator model: which entities are needed, how they are linked together, CRUD schema...

Outputs

- Interactions diagram

- Class and components diagrams

04-Realisation

Realisation Process

Objectives

- Develop a new simulator module, or update an existing one, in compliance with the normative documents

Pre-requisites

- Specification documents are validated

Inputs

- All specification documentation

Actions

- Develop simulator features

Outputs

- JAVA code + XHTML

- JAVA code documentation of module

05-Test

Test Process

Objectives

- Verify that the module respects all rules and specifications

Pre-requisites

- JAVA and XHTML code is produced

Inputs

- JAVA/XHTML Code

- Simulator webservice

- Message validated with suitable validator

Actions

- Unit Test
  - Static analysis
- Integration Test
  - Message exchange (makes it possible to test the IHE interface and, if required, the correct integration of the message in the database)
- System Test
  - System testing against the Specification documents (features & GUI)
- Acceptance Test
  - Beta testing by vendors according to their needs

Outputs

- Test report covering all test levels

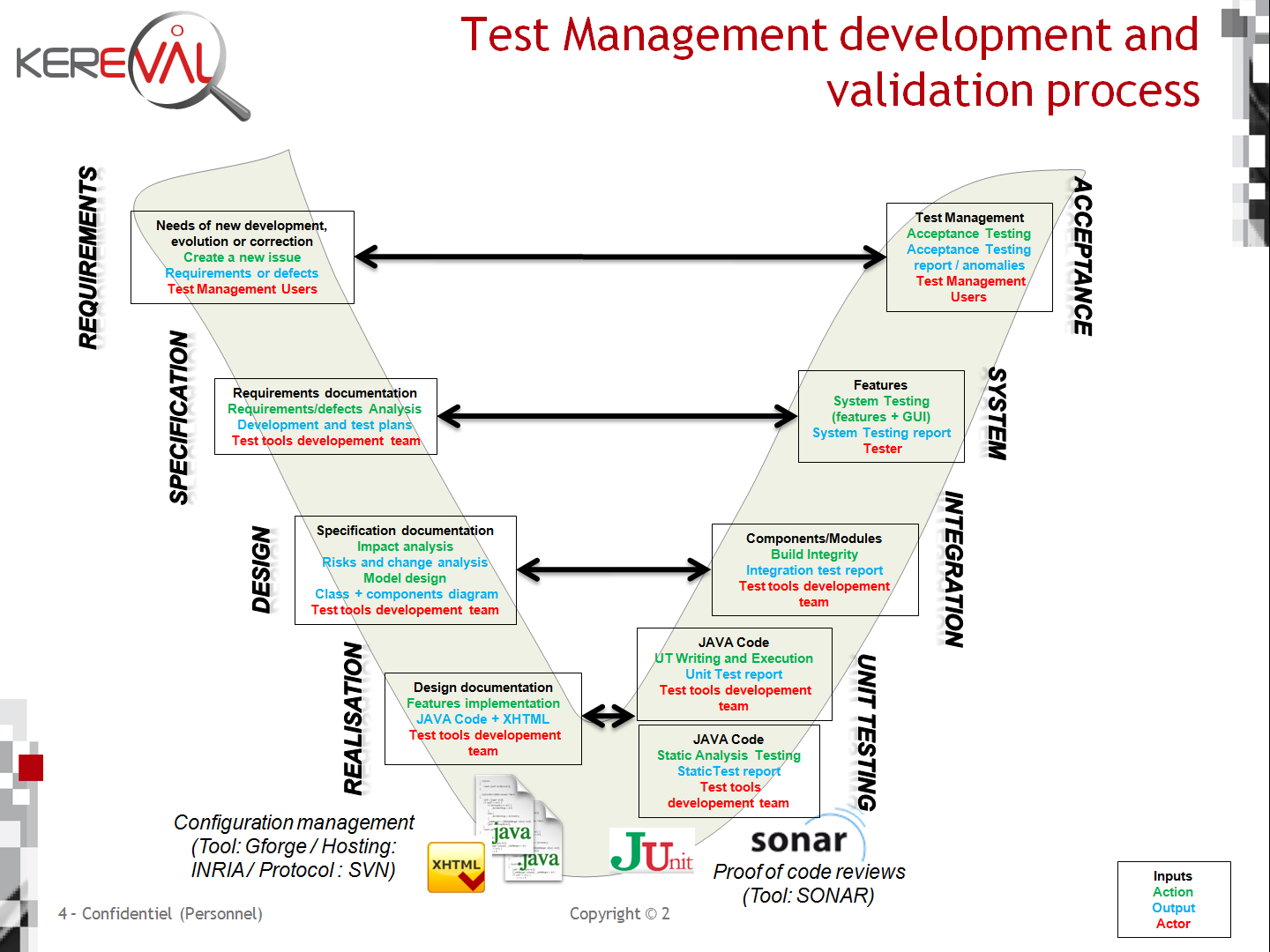

04-Test Management development and validation process

01-Requirements

Requirements Process

Objectives

- Formalize the need for a new development, evolution or correction of the platform.

- Describe the expected features in the form of use cases or defects

Pre-requisites

- N/A

Inputs

- Need for a new feature, a change or a correction

Actions

- Write the needs in an issue

Outputs

- Requirements / Defects

02-Specification

Specification Process

Objectives

- Identify and analyse impact from requirements process outputs

- Identify features and releases

- Identify tool impacts

Pre-requisites

- Requirements are identified and created

Inputs

- Issue which contains the need

Actions

- Analyse the issue and identify existing tools that could be used. If there are no existing tools, identify the developments to be performed

- Create a list of features to be offered by Test Management

- Specify the graphical user interface

Outputs

- Gap analysis (lists the support tools which are required by Test Management but missing)

- Features list

- Mock-up of GUI

- Development and Test plans

03-Design

Design Process

Objectives

- Design and create specification

Pre-requisites

- A formal expression of the needs is available

Inputs

- Existing specification and design of module

- Existing specification and design of tool

- Existing platform architecture and design

- Change requests (issue)

- Management of documentation process

Actions

- Design the module hierarchy: whether Test Management will inherit from existing Gazelle modules, what the dependencies on other modules/third-party libraries are, and which new modules have to be developed and should be kept as independent as possible for possible reuse.

- Design the Test Management model: which entities are needed and how they are linked together.

- Analyse the change requests and update their state

- Analyse the impact on modules other than those specified in the change request

Outputs

- Interactions diagram

- Class and components diagrams

- Specification and design of module

- Specification and design of tool

- Platform architecture and design

- Document index up to date

04-Realisation

Realisation Process

Objectives

- Develop new Test Management features in compliance with the normative documents

Pre-requisites

- Specification documents are validated

Inputs

- Tool specifications or module specifications

- Tool design document or module design document

- Coding rules

- Development repository

Actions

- Create new features or modules, applying the KEREVAL development process available on SMQ

Outputs

- JAVA code + XHTML

- JAVA code documentation of module

- New branch on development repository

05-Test

Test Process

Objectives

- Verify that the new features respect all rules and specifications

Pre-requisites

- JAVA and XHTML code is compiled

Inputs

- JAVA/XHTML Code

- Branch of development on IHE SVN

- Validation strategy

- Validation objectives

Actions

- Unit Test
  - Static analysis
  - Unit testing on critical methods
- Integration Test
  - Verify build integrity (components/modules)
- System Test
  - System testing against the Specification documents (features & GUI)
- Acceptance Test
  - Beta testing according to the expressed needs

Outputs

- Test report covering all test levels

05-Platform Delivery Process

Release

Objectives

- Release of the Test Tools

- Make a release note

- Tag the version

Pre-requisites

- Test Tool is implemented and tested

Inputs

- Validation report of the Test Tools

- Release sheet (Issues in JIRA)

- Test Tools in SVN validated

Actions

- Analyse the state of all change requests in JIRA

- Analyse the state of validation in the validation report

- Close all change requests

- Deliver the test tool

- Update tool index

Outputs

- Release Note

- Test Tools release
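The checks performed before delivering a test tool can be sketched as a simple release gate. This is a hypothetical illustration only: the issue keys, the status names `Resolved` and `Closed`, and the function `release_blockers` are assumptions and do not reflect the actual JIRA workflow of the lab.

```python
# Assumption: these status names mark a change request as done.
DONE_STATUSES = {"Resolved", "Closed"}


def release_blockers(change_requests: dict[str, str],
                     validation_passed: bool) -> list[str]:
    """List the reasons why a release should not be delivered yet.

    `change_requests` maps an issue key to its current status;
    `validation_passed` summarizes the validation report.
    """
    blockers = [
        f"{key} is still '{status}'"
        for key, status in sorted(change_requests.items())
        if status not in DONE_STATUSES
    ]
    if not validation_passed:
        blockers.append("validation report is not satisfactory")
    return blockers


# Hypothetical release sheet with one unfinished change request.
sheet = {"TOOL-12": "Resolved", "TOOL-15": "In Progress"}
print(release_blockers(sheet, validation_passed=True))
```

An empty list means the release note can be written and the version tagged.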

Platform Documentation

Objectives

- Maintain up-to-date documentation of the Test Tools for technical and marketing purposes

- Build up and preserve the Test Tools documents (user guides, presentations)

Pre-requisites

- N/A

Inputs

- Addition to major Test Tools features (e.g. coverage)

- IHE presentations related to major events (e.g. customer demonstration, IHE presentation)

Actions

- Store source files of document on network folder (Drupal)

Outputs

- Any document that helps to understand or promote the Test Tools

06-Gazelle Proxy

Project overview

See Gazelle Proxy Informations.

Development

Proxy development follows a V cycle similar to that of all other Test Tools projects. The Proxy is not critical to test results, so its development process has a lower priority.

Validation

Proxy validation is included in the global test strategy relative to its interactions with other Test Tools.

07-EVS Client

Project overview

Development

EVS Client development follows a V cycle similar to that of all other Test Tools projects. The EVS Client is not critical to test results, so its development process has a lower priority.

Validation

EVS Client validation is included in the global test strategy relative to its interactions with other Test Tools.

03 - Test Strategy applied to test tools

Preparation of the test strategy

- 1. What is tested
- 2. When we test
- 3. Why we test

Elements to be tested

| Tool | Type | Description |
|---|---|---|
| Test Management | Gazelle Test Bed | TM is the application used to manage the connectathon, from the registration process to the generation of the test report |
| Proxy | Support Tool | "Man in the middle": captures the messages exchanged between two systems and forwards them to the validation service front-end |
| Gazelle HL7 Validator | Validation Service | Offers web services to validate HL7v2.x and HL7v3 messages exchanged in the context of IHE |
| CDA Validator | Validation Service | Embedded in the CDA Generator tool, it offers a web service to validate a large set of different kinds of CDA using a model-based architecture |
| Schematron-Based Validator | Validation Service | Offers a web service to validate XML documents against schematrons |
| External Validation Service Front-end | Validation Service | The EVSClient is a front-end which allows the user to use the external validation services from a user-friendly interface instead of the raw web service offered by the Gazelle tools. |
| XD* Client | Simulator | Emulates the initiating actors of the XD* profiles (XDS.b, XCPD, XDR, XCA, DSUB ...) and validates XDS metadata using a model-based validation strategy |
| TLS Simulator | Simulator | Used to test the TLS-based transactions for various protocols |

Testing effort

The test effort will be prioritized as follows:

- Major release
  - Level changes for backwards-incompatible API changes, such as changes that will break the user interface
  - The test effort is prioritized in order to test all levels of tests "Must" and "Should" (a test report is mandatory)
- Minor release
  - Level changes for any backwards-compatible API changes, such as new functionality/features
  - The test effort is prioritized in order to test all levels of tests "Must" (a test report is not mandatory but recommended)
- Bug fixes
  - Level changes for implementation-level detail changes, such as small bug fixes
  - The test effort is decided by the Test Tools Development Team (a test report is not mandatory)
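The prioritization above can be sketched as a lookup from release type to the test priorities to cover. The sketch assumes version numbers of the form `MAJOR.MINOR.PATCH`, which the manual does not state explicitly; the names `release_type` and `RELEASE_POLICY` are hypothetical.

```python
# Policy per release type, transcribed from the list above.
# `priorities` is None for bug fixes because the effort is left to the
# Test Tools Development Team.
RELEASE_POLICY = {
    "major": {"priorities": ("Must", "Should"), "test_report": "mandatory"},
    "minor": {"priorities": ("Must",), "test_report": "recommended"},
    "bugfix": {"priorities": None, "test_report": "not mandatory"},
}


def release_type(previous: str, new: str) -> str:
    """Classify a release from two versions (assumed MAJOR.MINOR.PATCH)."""
    old_parts = tuple(int(part) for part in previous.split("."))
    new_parts = tuple(int(part) for part in new.split("."))
    if new_parts[0] != old_parts[0]:
        return "major"
    if new_parts[1] != old_parts[1]:
        return "minor"
    return "bugfix"


kind = release_type("3.2.1", "4.0.0")
print(kind, RELEASE_POLICY[kind])
```

Keeping the policy in one table makes it straightforward to check, for a planned delivery, whether a test report is expected.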

Criticality/Risks to test

A risk analysis was carried out to determine the project, product and lab risks. It is kept confidential in order to protect the test strategy of the Test Tools.

It makes it possible to identify the critical parts of the Test Tools that need to be tested.

- Must - Must have this requirement to meet the business needs.

- Should - Should have this requirement if possible, but project success does not rely on it.

- Could - Could have this requirement if it does not affect anything else in the project.

- Would - Would like to have this requirement later, but it won't be delivered this time.

"from the MoSCoW Method"

Development of the strategy

- 4. Which tests are performed
- 5. How it is tested
- 6. Who tests

Test Tools types and levels of testing

Test Management: test levels

| Test types | Unit testing | Integration testing | System testing |
|---|---|---|---|
| Functional testing | Should | Must | Must |
| Non-functional testing | Could | Must | Must |
| Structural testing | Would | N/A | N/A |
| Tests related to change | Should | Should | Should |

Proxy: test levels

| Test types | Unit testing | Integration testing | System testing |
|---|---|---|---|
| Functional testing | Must | Must | Must |
| Non-functional testing | Could | Should | Could |
| Structural testing | N/A | N/A | N/A |
| Tests related to change | Should | Should | Should |

HL7 Validator: test levels

| Test types | Unit testing | Integration testing | System testing |
|---|---|---|---|
| Functional testing | Would | Must | Must |
| Non-functional testing | N/A | Would | Would |
| Structural testing | N/A | Would | Would |
| Tests related to change | Could | Must | Must |

CDA Generator: test levels

| Test types | Unit testing | Integration testing | System testing |
|---|---|---|---|
| Functional testing | Must | Must | Should |
| Non-functional testing | Must | Should | N/A |
| Structural testing | N/A | N/A | N/A |
| Tests related to change | Would | Should | Would |

Schematron Validator: test levels

| Test types | Unit testing | Integration testing | System testing |
|---|---|---|---|
| Functional testing | Should | Should | Should |
| Non-functional testing | Should | Should | N/A |
| Structural testing | N/A | N/A | N/A |
| Tests related to change | Would | Should | Would |

EVS Client: test levels

| Test types | Unit testing | Integration testing | System testing |
|---|---|---|---|
| Functional testing | Would | Must | Must |
| Non-functional testing | N/A | Would | Would |
| Structural testing | N/A | N/A | N/A |
| Tests related to change | Would | Must | Would |

XD* Client: test levels

| Test types | Unit testing | Integration testing | System testing |
|---|---|---|---|
| Functional testing | Must | Must | Should |
| Non-functional testing | Must | Should | N/A |
| Structural testing | N/A | N/A | N/A |
| Tests related to change | Would | Should | Would |

TLS Simulator: test levels

| Test types | Unit testing | Integration testing | System testing |
|---|---|---|---|
| Functional testing | Should | Should | Could |
| Non-functional testing | Would | Would | Could |
| Structural testing | Would | Would | Would |
| Tests related to change | Should | Should | Should |

Test Tools functional requirements (system testing level)

Each test tool has its features detailed in a requirements management tool (TestLink). A set of high level requirements provides an overall view of the tool and of the tests that need to be performed.

| Tool | High level requirements |
|---|---|
| Test Management | Application Management |
| Test Management | Prepare test session |
| Test Management | Execute test session |
| Test Management | Report test session |
| Gazelle Proxy | Proxy Core functions |
| Gazelle Proxy | Proxy interfacing |
| HL7 Validator | HL7 Message Profile Management |
| HL7 Validator | HL7 Resources Management |
| HL7 Validator | HL7v2.x validation service |
| HL7 Validator | HL7v3 validation service |
| CDA Generator | CDA Validation Service |
| CDA Generator | Documentation Management |
| Schematron Validator | Schematron validation service |
| Schematron Validator | Schematrons Management |
| EVS Client | Clinical Document Architecture - Model Based Validation |
| EVS Client | Clinical Document Architecture - Schematrons |
| EVS Client | Cross Enterprise Document Sharing - Model Based Validation |
| EVS Client | Transverse - Constraints |
| EVS Client | Functional requirements |
| XDStar Client | XDS Metadata validation service |
| XDStar Client | XDS Documentation management |
| TLS Simulator | Not logged in users |
| TLS Simulator | Logged in users |

Organization

Breakdown into test campaigns

Two campaigns of system tests are planned in the project:

- A system testing campaign, including functional testing, to achieve the objectives of the tests described above

- A retest campaign (verification that anomalies have been corrected) and regression campaign

Moreover, when a release is planned, a testing day could be prepared to test the platform globally.

Unit and integration tests are managed during the Test Tools development cycle, and their results are analysed during the campaigns in order to produce a Test Tools test report.

In addition, a security audit of the platform is carried out to ensure that the platform can be used safely; it is renewed once a year.

Tests criticality

The levels and types of tests defined in "Test Tools types and levels of testing", in the "Development of the strategy" part, allow the actors to organize the testing of the tools quickly and easily. At the unit and integration levels, all tests rated "Must" should be executed. Tests rated "Should" are executed according to the time allotted for testing during development.

At the system level, the campaigns specified in this section will be organized according to the planned tool deliveries, covering the tests rated "Must" and then "Should", in order of priority.
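The rule "all Must tests, then Should tests as time allows" can be sketched as a small selection function. The test names, the duration estimates and the function `plan_campaign` are hypothetical; the manual does not prescribe how the time budget is measured.

```python
from dataclasses import dataclass

# MoSCoW priorities, from most to least important.
PRIORITY_ORDER = {"Must": 0, "Should": 1, "Could": 2, "Would": 3}


@dataclass
class PlannedTest:
    name: str
    priority: str   # one of the keys of PRIORITY_ORDER
    hours: float    # assumed effort estimate


def plan_campaign(tests: list[PlannedTest],
                  budget_hours: float) -> list[PlannedTest]:
    """Keep every "Must" test, then add "Should" tests while time remains."""
    selected = []
    remaining = budget_hours
    # sorted() is stable, so tests of equal priority keep their input order.
    for test in sorted(tests, key=lambda t: PRIORITY_ORDER[t.priority]):
        if test.priority == "Must":
            selected.append(test)          # never dropped, even over budget
            remaining -= test.hours
        elif test.priority == "Should" and test.hours <= remaining:
            selected.append(test)
            remaining -= test.hours
    return selected


candidates = [
    PlannedTest("A", "Should", 3),
    PlannedTest("B", "Must", 4),
    PlannedTest("C", "Should", 2),
    PlannedTest("D", "Could", 1),
]
print([t.name for t in plan_campaign(candidates, budget_hours=7)])
```

With a budget of 7 hours, the "Must" test B is kept first, then the "Should" test A fits in the remaining time, while C and D are left out.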

The Test Tools Development Manager is in charge of requiring and/or organizing tests campaigns, according to the targeted delivery.

Typical testing day (example of a test day before US CAT delivery)

Roles and responsibilities

Unit & Integration testing (Test Tools Development Team)

- Test platform administrator

- Unit test management

- Provision of datasets

System Testing (Testers)

- Test plan writing

- Test summary report writing

- Monitoring the implementation of system tests

- Test design

- Dataset design and management

- Test execution

- Bug report management

Acceptance Testing (Testers as Users)

- Beta testing in real conditions of use

- Bug or suggestions report

Test environment

Access to the application under test (URLs located on the web)

- Test Management : http://gazelle.ihe.net/EU-CAT/home.seam

- Gazelle Proxy : http://gazelle.ihe.net/proxy/home.seam

- HL7 Validator : http://gazelle.ihe.net/GazelleHL7Validator/home.seam

- CDA Generator : http://gazelle.ihe.net/CDAGenerator/home.seam

- Schematron Validator : http://gazelle.ihe.net/SchematronValidator/home.seam

- EVS Client : http://gazelle.ihe.net/EVSClient/

- XDStar Client : http://gazelle.ihe.net/XDStarClient/home.seam

- TLS Simulator : http://gazelle.ihe.net/tls/home.seam

Each Test Tool is available within the development environment of developers for unit testing.

Test datasets

Datasets are prepared before a test campaign, according to the need.

Calibration

For some test tools (TM and EVS excluded), reference datasets are specifically managed to calibrate the test tools.

Their purpose is to check that the test results provided by the test tools are still valid, even after a change to the tool.
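Calibration against a reference dataset amounts to a regression check: each reference sample is run through the tool and the verdict is compared with the recorded one. The sketch below is a minimal illustration; `calibrate`, the verdict strings and the toy validator are assumptions and stand in for the real validation services.

```python
from typing import Callable


def calibrate(validate: Callable[[str], str],
              reference_dataset: list[tuple[str, str]]) -> list[tuple[str, str, str]]:
    """Return (sample, expected, actual) for every verdict that drifted."""
    drift = []
    for sample, expected in reference_dataset:
        actual = validate(sample)
        if actual != expected:
            drift.append((sample, expected, actual))
    return drift


def toy_validator(message: str) -> str:
    """Stand-in validator: accepts messages that start with an MSH segment."""
    return "PASSED" if message.startswith("MSH|") else "FAILED"


# Hypothetical reference dataset: (sample, verdict recorded at calibration).
REFERENCE = [
    ("MSH|^~\\&|SENDER", "PASSED"),
    ("PID|1||12345", "FAILED"),
]

print(calibrate(toy_validator, REFERENCE))
```

An empty result means the tool still returns the recorded verdicts; any entry points to a sample whose result changed after the modification.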

Software Test Management

Test management relies on the TestLink test management system (TMS), in specific projects dedicated to the Test Tools. It is located at http://gazelle.ihe.net/testlink/login.php.

Software bug management

The software bug manager is JIRA, located at http://gazelle.ihe.net/jira/secure/Dashboard.jspa. When a failure is observed in the application, a bug report is written in JIRA.

Testing supplies

| Category | Item | To forward | To archive |
|---|---|---|---|
| Documentation | Test plan | X | X |
| Documentation | Test folder | X | |
| Documentation | Test report | X | X |
| Documentation | Bug report | X | X |
| Data | Test datasets | X | |
| Data | Basic input data | X | |
| Data | Basic output data | X | |
| Data | Records + trace | X | X |

Test Session Processes

Test Session Management

Objectives

- Prepare the Test Session so that it can run successfully

Processes

Tools

- The Gazelle Test Management tool lets the user define a JasperReports template to generate the contract for participation in the testing session. The contract is generated from the content of the template and can be customized accordingly (link to sample generated contract)

01 - Preparation

Registration of Participants

Objectives

- Organise participant registration and communication for the Test Session

Pre-requisites

- A new testing session is configured and opened by the Test Session Manager

In case of Accredited Testing, the involved SUT must have passed the targeted tests at an IHE connectathon.


Inputs

- All users who want to participate in the Test Session

- Set of validated test cases to be used for the Test Session

Actions

- SUT Operators :

- Register themselves and their company

- Register their system(s)


In case of Accredited Testing, the system version must not change at any time during the test session.

- Generate contracts

- Manage their preferences

- Test Session Manager :

- Accepts all user registrations (SUT Operators)

- Accepts systems into the session

- Creates accounts for monitors

- Monitors

- Log into the application

Outputs

- List of registered participants

- List of registered systems

- Generated contracts

- List of monitors

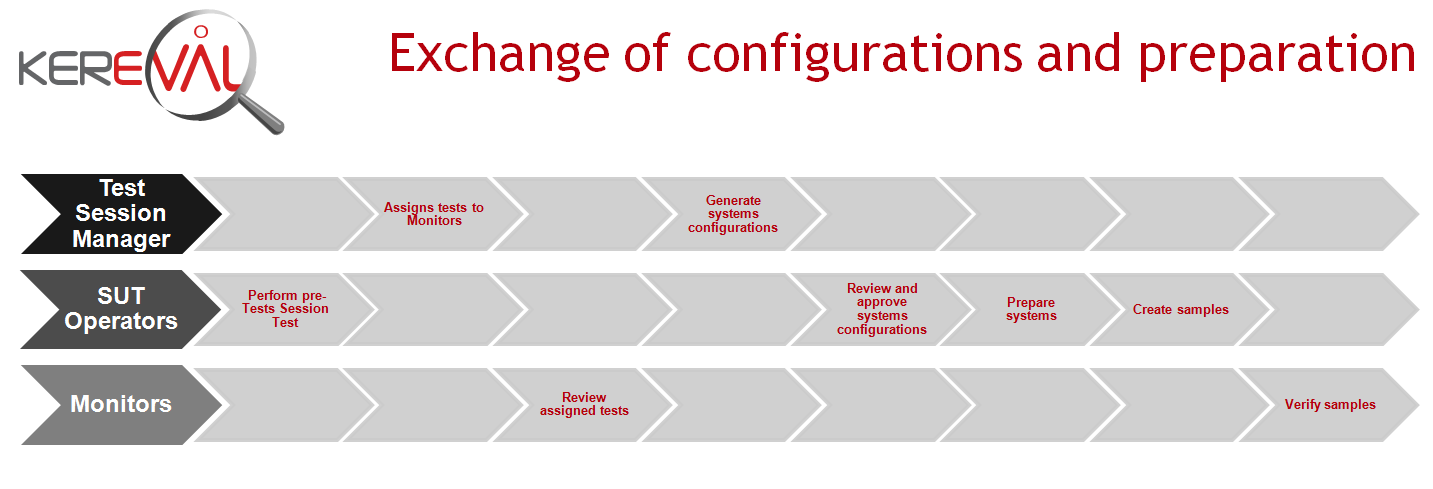

Exchange of configuration and preparation

Objectives

- Provide configuration information about systems

- Prepare Test Session

Pre-requisites

- A new testing session is configured and opened by the Test Session Manager

- All participants and their systems are registered for the Test Session

Inputs

- N/A

Actions

- SUT Operators :

- Perform pre-Test Session tests

- Review and approve configurations

- Prepare systems (pre-load test input/network configuration/peer configuration)

- Create and consume samples

- Test Session Manager :

- Assigns tests to monitors

- Generates system configurations

- Monitors

- Review assigned tests

- Verify samples

Outputs

- Test Session planning

- Approval by Test Session Manager of the readiness to start the session (based on the test session planning dashboard)

Flowchart

02 - Execution

Objectives

- Perform tests for registered systems

Pre-requisites

- Test Session is configured and opened

- Participants are registered

- Systems are registered

- System configurations are ready for information exchange

- Tests are ready, validated and assigned

Inputs

- Key Performance Indicators that allow following the progress of the test execution

- Reporting elements that need to be provided in the test session report

- Satisfaction questionnaires for participants are prepared

Actions

- Test Session Manager

- Sends Kick-off mail (see template)

- Consults KPI

- Turns critical status to on

- Grades systems

- Test cases may be added to the test plan, even if they were not planned at the beginning.

- SUT Operators

- Run assigned tests

- Store any proof of test execution (logs, traces...)

- Change tests status

- Complete the satisfaction questionnaire

- Monitors

- Verify assigned tests

- Specific attention must be paid when a test result seems to be false : the monitor checks the environment first, before concluding whether the result is false or not

- If test steps are not performed (e.g. : NIST tools not available), the monitor mentions it and adds a comment explaining why this does not impact the test result.

- Complete the satisfaction questionnaire

Outputs

- Satisfaction questionnaires, see for example the Istanbul survey

- Set of test instances performed during the Test Session execution (includes Monitors and SUT operators comments)

Tools

Flowchart

Kick-off mail template

CONTEXT (SUT Description)

- List of organizations / SUTs / SUT Operators :

- On Gazelle Test Management : in "Connectathon" -> "Find systems" to view organizations, systems and SUT Operators concerned by the Test Session.

COVERED BY 17025 ACCREDITATION ?

- YES / NO

TEST SESSION IDENTIFICATION

- Test session name :

- If online session :

- GoToMeeting :

- Risks :

- Begin :

- End :

- Test Session Manager :

- Monitors :

- On Gazelle Test Management : in "Connectathon" -> "Find a monitor" to see all monitors concerned by the Test Session

- Quality controller :

TEST PERIMETER

- Tests to run :

- Tests to run are provided by the Gazelle Test Management Tool

- Tests not to run :

- Configurations :

- Number of configurations :

- Configuration identification :

ENVIRONMENT

- Gazelle Platform :

- Version :

- Other Test Tools :

- [TEST TOOL 1] version :

- [TEST TOOL 2] version :

- [TEST TOOL 3] version :

SPECIFIC REQUIREMENTS

- Requirement 1 :

- Requirement 2 :
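Where the kick-off mail is produced by a script rather than by hand, the template above could be filled in as sketched below. This is only an illustration under assumptions: it covers a subset of the fields, the placeholder names and example values are hypothetical, and the manual does not prescribe any particular tooling for this step.

```python
from string import Template

# Subset of the kick-off mail template; placeholder names are hypothetical.
KICKOFF = Template(
    "COVERED BY 17025 ACCREDITATION ?\n"
    "- $accredited\n"
    "TEST SESSION IDENTIFICATION\n"
    "- Test session name : $session_name\n"
    "- Begin : $begin\n"
    "- End : $end\n"
    "- Test Session Manager : $manager\n"
)


def render_kickoff(fields: dict) -> str:
    # substitute() raises KeyError when a placeholder has no value,
    # so an incomplete mail is never produced silently.
    return KICKOFF.substitute(fields)


# Placeholder values for illustration only.
example = {
    "accredited": "YES",
    "session_name": "Example session",
    "begin": "YYYY-MM-DD",
    "end": "YYYY-MM-DD",
    "manager": "Test Session Manager name",
}

print(render_kickoff(example))
```

Using `substitute` rather than `safe_substitute` is a deliberate choice here: a missing field stops the rendering instead of leaving an unfilled placeholder in the mail.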

03 - Reporting

Report Test Session Process

Objectives

- Test session reporting has two objectives :

- provide the test session participant with a report of the outcome of the participation of the SUT in the test session.

- provide the certification body with a report of the test session.

Pre-requisites

- The test session is closed, and neither the participants nor the monitors can update or change any information on the tests performed during the session. All required tests were performed during the test session and graded by the monitors, and the grading of the SUT was performed by the Test Session Manager

Inputs

- Inputs are the elements of testing (logs, reports, annotations, bug reports) that were recorded during the test session

- Survey filled out by testers

Actions

- The Test Session Manager generates and signs the SUT test report using the Gazelle Test Management application

- The Test Session Manager writes a report to the certification body. The report includes KPIs about the test session

Outputs

- Test session report covered under accreditation, sent to the certification body

- SUT test reports, signed by the Lab Manager, sent to each SUT operator and to the certification body

All outputs are distributed in their electronic version (signed PDF). The electronic version of the document prevails

Staff Qualification

Objectives

Ensure that available skills match the skills required to take on specific roles in KEREVAL Health Lab. Identify by name who is allowed to play each role.

Qualified roles

The Laboratory Chart identifies roles for which qualification is required.

For a test session

The required level of skills (knowledge and experience) is established by the Lab manager. He is then in charge of analysing, assessing and qualifying candidates for a role on the basis of their self-declaration.

A candidate is then qualified with an assessed level : With Coaching, Autonomous, Expert. With Coaching means that the candidate can act within his/her role, but with coaching from an expert.

Qualification is valid for one test session.

Test session manager

A test session manager is a resource acting on behalf of KEREVAL Health Lab.

He/she must be ISTQB Foundation certified, autonomous in this job, and autonomous in all health test tool domains and IHE standards.

Monitor

A monitor is a resource acting on behalf of KEREVAL Health Lab.

Monitors are recruited within the KEREVAL team for online testing sessions, but can be recruited outside the KEREVAL team for mobile testing sessions (see connectathon monitor's documentation). They must fill in a form describing their skills (e.g. : monitor's form for Bern Connectathon). Independence and potential conflicts of interest are checked.

SUT operator

SUT operators must be qualified to take part in a test session covered by the accreditation: they must have good knowledge and sufficient experience to be autonomous on the SUT, IHE and the Test tools.

Knowledge and experience are collected before such a test session.

Other roles involved in KEREVAL Health Lab

The required level of skills is established on the basis of KEREVAL experience related to other labs. Skills for the lab manager, his substitute and the auditors are assessed against established criteria.

Qualification is valid for one year.

Note : the Lab Manager and the substitute are the only ones allowed to sign test reports

| Lab Manager | Eric POISEAU | Assessed level : | Expert | helped & controlled on QMS practices by RBE |

| Lab manager substitute | Alain RIBAULT | Assessed level : | With coaching | technically helped by the test tool development team |

| Auditors | ||||

| management part | Raphaëlle BATOGE | Assessed level : | Expert | |

| technical part | Alain RIBAULT | Assessed level : | Expert | |

| technical part | Thomas DOLOUE | Assessed level : | With coaching | coached by the other auditors |

Project Management

This process is inspired by Scrum, an agile project management method, where the Scrum Master is the test tools development project manager.

This process is largely tool-supported, based on Jira.

Quality Management

The quality of KEREVAL Health Lab activities is continuously monitored with 3 main tools :

Management of non-conforming Works

or how to detect possible non-conforming works, identify impacts on our test results and take appropriate decisions.

KPI monitoring

or how to follow and monitor Key Performance Indicators and warnings on commitments of the lab, test activities, level of resources...

KPIs are defined in the contract with the customer of the lab, IHE-Europe.

On a monthly basis, the Quality engineer is in charge of collecting and reporting these KPIs. The lab manager gives his/her analysis.

In case of major deviations between planned and actual data, actions can be taken either with IHE-Europe, or internally at the top management level of KEREVAL.
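The monthly comparison of planned and actual data can be sketched as follows. This is only an illustration under assumptions: the KPI names and the 20 % threshold are hypothetical, since the actual KPIs and what counts as a major gap are defined in the contract with IHE-Europe, not here.

```python
from typing import Dict


def major_deviations(planned: Dict[str, float],
                     actual: Dict[str, float],
                     threshold: float = 0.20) -> Dict[str, float]:
    """Return the KPIs whose relative deviation from plan exceeds threshold."""
    flagged = {}
    for name, plan in planned.items():
        if plan == 0:
            continue  # relative deviation is undefined for a zero target
        deviation = abs(actual.get(name, 0) - plan) / plan
        if deviation > threshold:
            flagged[name] = round(deviation, 2)
    return flagged


# Hypothetical monthly figures.
planned = {"tests_verified": 100, "monitors_available": 5}
actual = {"tests_verified": 70, "monitors_available": 5}

print(major_deviations(planned, actual))  # {'tests_verified': 0.3}
```

The flagged KPIs are the ones that would be brought to the lab manager for analysis.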

References monitoring

or how to ensure that the lab always uses the up-to-date and applicable references in terms of testing.

The lab and its staff commit themselves to always use the correct version of applicable methods and reference material related to tested profiles.

The lab manager is always aware of any modification concerning tested profiles.

General method references ([GITB], [HITCH], [17025], [ISTQB]) are always checked as an input to the Quality committee.

In case of modification of one of these references, an impact analysis is performed by the Quality Manager and/or the Lab manager, in order to decide on appropriate actions to take these modifications into account (process modification, new training, new qualification...).

Non conforming Works

Definition

A non-conforming work is a test session, based on a contractual framework, which is not performed in conformance with the rules of the lab (procedures, quality manual...). The test session process is particularly concerned. In the case of KEREVAL Health Lab, the focus is on non-conforming works that could affect test results.

Applicable scope

Any issue that could occur regarding the different elements that compose the test platform (developed or used by KEREVAL Health Lab, or IHE test plans, provided by the IHE test design team).

Objectives

- Detect possible non-conforming works as soon as possible

- Identify the impact of these non-conformances on test results (already delivered or currently in progress)

- Enable appropriate decisions to be taken, in order to avoid or reduce such impact

Prerequisites

Several warning signs can indicate a potential non-conforming work :

- either a defect is detected on any test tool, during development or during a test session

- or the test session process is not fully respected (such finding can be detected during internal quality checks)

- or a claim from a customer is raised, and approved by the CTO

- or a known issue on a test case is raised

Inputs

- Issues, in JIRA

or

- Finding in the applied process, documented in a Quality report

or

- Customer claim, in TUTOS

Actions

- In case of an issue created in JIRA, the test tool development manager applies the test tool development process. An impact analysis is documented directly in the JIRA issue, including a dedicated analysis of the potential impact on test results.

- In case of impact on test results (current or already provided), the test tool development manager raises the issue to the lab manager.

- In case of non-compliance with the test session process, detected by any quality check, review or internal audit, the finding is raised to the lab manager, who analyses potential reasons and impacts.

- In case of a customer claim, the lab manager analyses the potential causes and reasons.

- In case of an issue on IHE test cases, any report containing this test case and provided before the detection remains valid. Nevertheless, KEREVAL Health Lab will keep the concerned customer informed.

- If a non-conforming work is confirmed by the lab manager after such analyses, he informs the quality manager, who creates a TUTOS incident (category : "travail non-conforme/non-conforming work"); its gravity is assessed (Léger/Light | Important/Important | Bloquant/Blocking).

- The Quality Manager keeps the Sales manager and the CTO informed during production review, or directly if the incident is "blocking".

- The Top Level Management committee decides the action that must be taken.

- Several types of actions are possible, according to the level of importance and its frequency of occurrence :

- communicate or not with the concerned customers

- run the concerned tests again or not

- decide or not on a commercial action to satisfy the customer

- decide or not to review the staff qualification

- decide or not on an improvement or corrective action on test tools development practices.

Outputs

- TUTOS incidents, category : travail non-conforme/non-conforming work, on IHE projects

Closure criteria

- TUTOS incidents, category : travail non-conforme/non-conforming work, on IHE projects, are closed

Tools

- TUTOS

- JIRA
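The gravity levels and the notification rule described in the Actions above can be summarised in a small model. This is a minimal sketch under assumptions: the class and function names are hypothetical, and it does not use or represent the TUTOS or JIRA interfaces.

```python
from dataclasses import dataclass
from enum import Enum


class Gravity(Enum):
    LIGHT = "Léger/Light"
    IMPORTANT = "Important/Important"
    BLOCKING = "Bloquant/Blocking"


@dataclass
class Incident:
    summary: str
    gravity: Gravity
    category: str = "travail non-conforme/non-conforming work"


def notification_channel(incident: Incident) -> str:
    """How the Quality Manager informs the Sales manager and the CTO."""
    if incident.gravity is Gravity.BLOCKING:
        return "direct"
    return "production review"


# Hypothetical incident for illustration.
incident = Incident("Validator returned a wrong verdict", Gravity.BLOCKING)
print(notification_channel(incident))  # direct
```

Only blocking incidents bypass the production review; the decision on the action to take stays with the Top Level Management committee in every case.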

Terms and abbreviations

Abbreviations

C

- CAT : Connectathon

- CDA : Clinical Document Architecture

E

- EVS : External Validation Services

G

- GMM : Gazelle Master Model

I

- IHE : Integrating the Healthcare Enterprise

P

- PAT : Projectathon

- PDT : Test Plan

S

- SUT : System Under Test

T

- TM : Test Management

X

- XDS : Cross Enterprise Document Sharing

Glossary

B

- Benchmark Test : A standard against which measurements or comparisons can be made. A test that is used to compare components or systems to each other or to a standard

- Beta testing : Operational testing by potential and/or existing users/customers at an external site not otherwise involved with the developers, to determine whether or not a component or system satisfies the user/customer needs and fits within the business processes. Beta testing is often employed as a form of external acceptance testing in order to acquire feedback from the market

- Black box testing : Testing, either functional or non-functional, without reference to the internal structure of the component or system

- Black box test design techniques : Documented procedure to derive and select test cases based on an analysis of the specification, either functional or non-functional, of a component or system without reference to its internal structure

- Blocked test case : A test case that cannot be executed because the preconditions for its execution are not fulfilled

C

- Certification : The process of confirming that a component, system or person complies with its specified requirements, e.g. by passing an exam

- Code coverage : An analysis method that determines which parts of the software have been executed (covered) by the test suite and which parts have not been executed, e.g. statement coverage, decision coverage or condition coverage

- Compliance : The capability of the software product to adhere to standards, conventions or regulations in laws and similar prescriptions

- Component : A minimal software item that can be tested in isolation

- Coverage : The degree, expressed as a percentage, to which a specified coverage item has been exercised by a test suite

D

- Defect : A flaw in a component or system that can cause the component or system to fail to perform its required function, e.g. an incorrect statement or data definition. A defect, if encountered during execution, may cause a failure of the component or system

- Driver : A software component or test tool that replaces a component that takes care of the control and/or the calling of a component or system

- Dynamic analysis : The process of evaluating behavior, e.g. memory performance, CPU usage, of a system or component during execution

- Dynamic testing : Testing that involves the execution of the software of a component or system

E

- Exit criteria : The set of generic and specific conditions, agreed upon with the stakeholders, for permitting a process to be officially completed. The purpose of exit criteria is to prevent a task from being considered completed when there are still outstanding parts of the task which have not been finished. Exit criteria are used by testing to report against and to plan when to stop testing

- Expected result : The behavior predicted by the specification, or another source, of the component or system under specified conditions

F

- Fail : A test is deemed to fail if its actual result does not match its expected result

- Failure : Actual deviation of the component or system from its expected delivery, service or result. [After Fenton] The inability of a system or system component to perform a required function within specified limits. A failure may be produced when a fault is encountered

- Functional requirement : A requirement that specifies a function that a component or system must perform

I

- Impact analysis : The assessment of change to the layers of development documentation, test documentation and components, in order to implement a given change to specified requirements

- Incident : Any event occurring during testing that requires investigation

- Interoperability : The capability of the software product to interact with one or more specified components or systems

N

- Non-functional requirement : A requirement that does not relate to functionality, but to attributes such as reliability, efficiency, usability, maintainability and portability

P

- Pass : A test is deemed to pass if its actual result matches its expected result

- Precondition : Environmental and state conditions that must be fulfilled before the component or system can be executed with a particular test or test procedure

R

- Requirement : A condition or capability needed by a user to solve a problem or achieve an objective that must be met or possessed by a system or system component to satisfy a contract, standard, specification, or other formally imposed document

S

- Simulator : A device, computer program or system used during testing, which behaves or operates like a given system when provided with a set of controlled inputs

- Static analysis : Analysis of software artifacts, e.g. requirements or code, carried out without execution of these software development artifacts. Static analysis is usually carried out by means of a supporting tool

- Stub : A skeletal or special-purpose implementation of a software component, used to develop or test a component that calls or is otherwise dependent on it. It replaces a called component

T

- Test case : A set of input values, execution preconditions, expected results and execution postconditions, developed for a particular objective or test condition, such as to exercise a particular program path or to verify compliance with a specific requirement

- Test condition : An item or event of a component or system that could be verified by one or more test cases, e.g. a function, transaction, quality attribute, or structural element

- Test level : A group of test activities that are organized and managed together. A test level is linked to the responsibilities in a project. Examples of test levels are component test, integration test, system test and acceptance test

- Test strategy : A high-level document defining the test levels to be performed and the testing within those levels for a programme (one or more projects)

U

- Use case : A sequence of transactions in a dialogue between an actor and a component or system with a tangible result, where an actor can be a user or anything that can exchange information with the system

V

- Validation : Confirmation by examination and through provision of objective evidence that the requirements for a specific intended use or application have been fulfilled

- Verification : Confirmation by examination and through the provision of objective evidence that specified requirements have been fulfilled

W

- White box test design technique : Documented procedure to derive and select test cases based on an analysis of the internal structure of a component or system